Bark TTS Complete Guide

Introduction

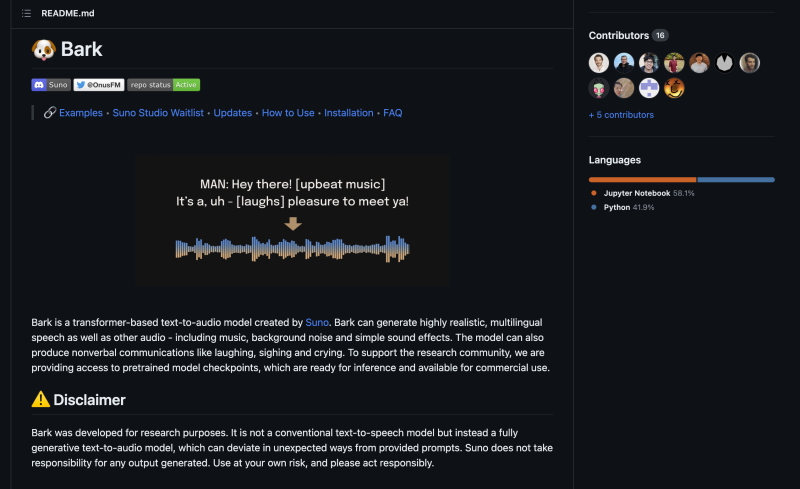

Bark is a transformer-based text-to-audio model developed by Suno. It generates highly realistic, multilingual speech, and it can also produce other kinds of audio, including music, background noise, and simple sound effects, which makes it useful in multimedia and entertainment applications as well as speech synthesis.

What sets Bark apart is its proficiency in emulating nonverbal communications such as laughing, sighing, and crying. These elements add a layer of emotional depth and realism to the audio outputs, making them more relatable and lifelike. This feature is particularly beneficial in creating more natural interactions in virtual assistants, gaming characters, and other digital avatars.

To support the research community, Suno provides access to pretrained model checkpoints that are ready for inference. This lets researchers, developers, and enthusiasts explore the model, experiment with it, and build on it without training anything from scratch.

Supported Languages

The Bark text-to-audio model developed by Suno supports multiple languages. It has the capability to automatically determine the language from the input text. When prompted with text that includes code-switching, Bark tries to employ the native accent for the respective languages. Currently, the quality of English generation is noted as being the best, but there is an expectation that other languages will improve with further development and scaling.

The Bark README includes a table of supported languages. At the time of writing it lists thirteen: English, German, Spanish, French, Hindi, Italian, Japanese, Korean, Polish, Portuguese, Russian, Turkish, and simplified Chinese. Because the language is inferred from the input text, no language flag needs to be set when generating audio.
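Voice presets are named after a language code and a speaker index, so the language coverage can be made concrete with a small helper. This is a minimal sketch, assuming the thirteen language codes and the ten speakers per language (indices 0 to 9) found in the Bark repository's prompt library at the time of writing; the helper name voice_preset is hypothetical.

```python
# Language codes assumed from the Bark README's supported-languages table.
SUPPORTED_LANGUAGES = {
    "en": "English", "de": "German", "es": "Spanish", "fr": "French",
    "hi": "Hindi", "it": "Italian", "ja": "Japanese", "ko": "Korean",
    "pl": "Polish", "pt": "Portuguese", "ru": "Russian", "tr": "Turkish",
    "zh": "Chinese (simplified)",
}
SPEAKERS_PER_LANGUAGE = 10  # assumption: speaker indices 0 through 9


def voice_preset(lang, speaker):
    """Build a preset name such as 'v2/en_speaker_6' (hypothetical helper)."""
    if lang not in SUPPORTED_LANGUAGES:
        raise ValueError(f"unsupported language code: {lang!r}")
    if not 0 <= speaker < SPEAKERS_PER_LANGUAGE:
        raise ValueError(f"speaker index out of range: {speaker}")
    return f"v2/{lang}_speaker_{speaker}"


# Every preset name the two assumptions above imply.
all_presets = [
    voice_preset(lang, index)
    for lang in SUPPORTED_LANGUAGES
    for index in range(SPEAKERS_PER_LANGUAGE)
]
```

Under these assumptions the list holds 130 names, which is consistent with the "over 100 speaker presets" figure quoted for Bark.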

Features

Bark's features cover audio generation in a range of contexts, from plain speech to more complex audio scenes. The main ones are:

- Multilingual Speech Generation: One of Bark's most notable features is its ability to generate highly realistic, human-like speech in multiple languages. This multilingual capacity makes it suitable for global applications, providing versatility in speech synthesis across different languages. It automatically detects and responds to the language used in the input text, even handling code-switched text effectively.

- Nonverbal Communication Sounds: Beyond standard speech, Bark can produce nonverbal audio cues such as laughter, sighing, and crying. This capability enhances the emotional depth and realism of the audio output, making it more relatable and engaging for users.

- Music, Background Noise, and Sound Effects: Apart from speech, Bark is also capable of generating music, background ambiance, and simple sound effects. This feature broadens its use in creating immersive audio experiences for various multimedia applications, such as games, virtual reality environments, and video production.

- Voice Presets and Customization: Bark supports over 100 speaker presets across supported languages, allowing users to choose from a variety of voices to match their specific needs. While it tries to match the tone, pitch, emotion, and prosody of a given preset, it does not currently support custom voice cloning.

- Advanced Model Architecture: Bark employs a transformer-based model architecture, which is known for its effectiveness in handling sequential data like language. This architecture allows Bark to generate high-quality audio that closely mimics human speech patterns.

- Integration with the Transformers Library: Bark is available in the Transformers library, facilitating its use for those familiar with this popular machine learning library. This integration simplifies the process of generating speech samples using Bark.

- Accessibility for Research and Commercial Use: Suno provides access to pretrained model checkpoints for Bark, making it accessible for research and commercial applications. This open access promotes innovation and exploration in the field of audio synthesis technology.

- Realistic Text-to-Speech Capabilities: Bark’s text-to-speech functionality is designed to produce highly realistic and clear speech output, making it suitable for applications where natural-sounding speech is paramount.

- Handling of Long-form Audio Generation: Bark is equipped to handle long-form audio generation, though there are some limitations in terms of the length of the speech that can be synthesized in one go. This feature is useful for creating longer audio content like podcasts or narrations.

- Community and Support: Suno has fostered a growing community around Bark, with active sharing of useful prompts and presets. This community support enhances the user experience by providing a platform for collaboration and sharing best practices.

- Voice Cloning Capabilities: While Bark does not support custom voice cloning within its core model, there are extensions and adaptations of Bark that include voice cloning capabilities, allowing users to clone voices from custom audio samples.

- Dual-Use Safeguards: Suno acknowledges the potential for dual use of text-to-audio models like Bark. They provide resources and classifiers to help detect Bark-generated audio, aiming to reduce the chances of unintended or nefarious uses.
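Several of the features above are driven purely by what appears in the text prompt. The sketch below assumes the bracketed cue tokens and the ♪ lyric markers described in the Bark README; the helper names add_cue and as_song are hypothetical, and the model treats these markers as hints rather than guaranteed commands.

```python
# Cue tokens assumed from the Bark README's list of known non-speech sounds.
# The model treats them as hints, so results vary from run to run.
NONVERBAL_CUES = (
    "[laughter]", "[laughs]", "[sighs]",
    "[music]", "[gasps]", "[clears throat]",
)


def add_cue(text, cue):
    """Append a nonverbal cue token to a prompt (hypothetical helper)."""
    if cue not in NONVERBAL_CUES:
        raise ValueError(f"unknown cue: {cue!r}")
    return f"{text.strip()} {cue}"


def as_song(lyrics):
    """Wrap lyrics in music-note characters to nudge Bark toward singing."""
    return f"♪ {lyrics.strip()} ♪"


speech_prompt = add_cue("Well, that did not go as planned.", "[sighs]")
song_prompt = as_song("In the jungle, the mighty jungle")
```

Either string can then be passed to Bark like any other text prompt.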

How to Setup Bark in Python

This section walks through installing Bark and generating audio from text, first with Suno's own bark package and then through the Hugging Face Transformers library.

Installation and Setup

1. Install the Transformers Library: Bark is available in the Transformers library from version 4.31.0 onwards. Install the library along with scipy using pip:

pip install --upgrade pip

pip install --upgrade transformers scipy

2. Install Bark: Avoid using pip install bark, as it installs a different package. Instead, install Bark directly from the GitHub repository:

pip install git+https://github.com/suno-ai/bark.git
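After installing, it can be worth confirming that the installed Transformers release is new enough to include Bark. The following is a minimal sketch using only the standard library; the helper names are hypothetical, and the version parsing is deliberately simple (it keeps the leading numeric components, so a pre-release such as 4.31.0rc1 counts as 4.31.0).

```python
import re
from importlib import metadata

MIN_TRANSFORMERS = (4, 31, 0)  # first release that ships Bark, per the text above


def parse_version(text):
    """Return the leading numeric components of a version string, padded to 3."""
    parts = []
    for piece in text.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break  # stop at suffixes such as "dev0"
        parts.append(int(match.group()))
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def transformers_supports_bark(installed=None):
    """Check a version string, or the installed package if none is given."""
    if installed is None:
        try:
            installed = metadata.version("transformers")
        except metadata.PackageNotFoundError:
            return False
    return parse_version(installed) >= MIN_TRANSFORMERS
```

Calling transformers_supports_bark() with no argument inspects the current environment and returns False if the package is missing.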

Generating Audio

Basic Usage

1. Preload Models: First, you need to download and load all models:

from bark import SAMPLE_RATE, generate_audio, preload_models

preload_models()

2. Generate Audio from Text: Provide a text prompt and use the generate_audio function to create an audio array:

text_prompt = "Hello, my name is Suno."

audio_array = generate_audio(text_prompt)

3. Save or Play Audio: You can save the generated audio to a file or play it directly in a notebook:

from scipy.io.wavfile import write as write_wav

from IPython.display import Audio

# Save to file

write_wav("output.wav", SAMPLE_RATE, audio_array)

# Play in notebook

Audio(audio_array, rate=SAMPLE_RATE)

Advanced Features

- Multilingual Support: Bark can automatically detect and generate speech in various languages from the input text.

- Music Generation: Bark can also create music. Enclose your lyrics in music note characters (♪) to guide the model towards generating music.

- Voice Presets: There are over 100 speaker presets across supported languages. You can specify a voice preset by passing it as the history_prompt argument of the generate_audio function.

- Long-form Audio Generation: Bark is capable of generating longer audio sequences, though there's a practical limit to the length of continuous speech it can synthesize in one go.
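Voice presets and long-form generation are often combined: the text is split into short pieces, each piece is synthesized with the same preset, and the results are joined. The sketch below assumes that approach. The function names, the 200-character chunk budget, and the quarter-second pause are illustrative choices, not values taken from Bark; generate_audio, its history_prompt argument, and SAMPLE_RATE are from the bark package.

```python
import re


def split_into_chunks(text, max_chars=200):
    """Group whole sentences into chunks of at most max_chars characters.

    A single sentence longer than max_chars is kept whole as its own chunk.
    """
    sentences = [
        s.strip()
        for s in re.split(r"(?<=[.!?])\s+", text.strip())
        if s.strip()
    ]
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def generate_long_audio(text, voice="v2/en_speaker_6", pause_seconds=0.25):
    """Synthesize each chunk with the same preset and join the audio."""
    # Imported lazily so the chunking helper works without Bark installed.
    import numpy as np
    from bark import SAMPLE_RATE, generate_audio

    silence = np.zeros(int(pause_seconds * SAMPLE_RATE), dtype=np.float32)
    pieces = []
    for chunk in split_into_chunks(text):
        pieces.append(generate_audio(chunk, history_prompt=voice))
        pieces.append(silence)  # short gap between chunks
    return np.concatenate(pieces) if pieces else silence[:0]
```

Reusing one preset for every chunk keeps the speaker consistent across the joined audio; the array returned by generate_long_audio can be saved with write_wav exactly as in the basic example.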

Using Bark with Hugging Face Transformers

1. Instantiate the Pipeline: Use the text-to-speech pipeline from the Transformers library with the Bark model:

from transformers import pipeline

pipe = pipeline("text-to-speech", model="suno/bark-small")

2. Generate Audio: Pass the text to the pipeline for audio generation:

text = "Your text here"

output = pipe(text)

3. Play the Audio: In a Jupyter notebook, you can play the audio using:

from IPython.display import Audio

Audio(output["audio"], rate=output["sampling_rate"])
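The pipeline is the shortest route, but loading the processor and model directly gives control over the voice preset. The following is a sketch of that lower-level path, assuming the suno/bark-small checkpoint used above and an English preset name; the function names synthesize and save_wav are hypothetical wrappers around the Transformers and SciPy calls.

```python
def synthesize(text, voice_preset="v2/en_speaker_6", checkpoint="suno/bark-small"):
    """Generate speech with a chosen voice preset; returns (samples, sample_rate)."""
    # Imported lazily because loading Transformers and the weights is slow.
    from transformers import AutoProcessor, BarkModel

    processor = AutoProcessor.from_pretrained(checkpoint)
    model = BarkModel.from_pretrained(checkpoint)

    # The processor tokenizes the text and attaches the preset's speaker prompt.
    inputs = processor(text, voice_preset=voice_preset)
    audio = model.generate(**inputs)

    samples = audio.cpu().numpy().squeeze()  # drop the batch dimension
    return samples, model.generation_config.sample_rate


def save_wav(path, samples, sample_rate):
    """Write the generated samples to a WAV file using SciPy."""
    from scipy.io.wavfile import write as write_wav

    write_wav(path, sample_rate, samples)
```

A typical call would be samples, rate = synthesize("Hello from Bark.") followed by save_wav("bark_out.wav", samples, rate). The first call downloads the checkpoint, so it can take a while.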

Optimization Techniques

The Transformers implementation of Bark supports several optimizations that improve speed and reduce memory use: loading the model in half precision (fp16), offloading idle sub-models to the CPU to lower GPU memory usage, and kernel fusion via BetterTransformer (from the Optimum library) for faster processing.
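These options can be combined when loading the model. The sketch below assumes the calls described in the Transformers documentation for Bark (torch_dtype, enable_cpu_offload, to_bettertransformer); the function name and its defaults are hypothetical, CPU offload additionally needs the accelerate package, BetterTransformer needs optimum, and availability depends on the installed versions.

```python
def load_optimized_bark(checkpoint="suno/bark-small", use_fp16=True,
                        cpu_offload=False, better_transformer=False):
    """Load Bark with optional memory and speed optimizations (a sketch)."""
    # Imported lazily so that defining this function stays cheap.
    import torch
    from transformers import BarkModel

    device = "cuda" if torch.cuda.is_available() else "cpu"

    # Half precision roughly halves memory use; only applied on a GPU here.
    dtype = torch.float16 if (use_fp16 and device == "cuda") else torch.float32
    model = BarkModel.from_pretrained(checkpoint, torch_dtype=dtype).to(device)

    if better_transformer:
        # Kernel fusion; requires the optimum package.
        model = model.to_bettertransformer()

    if cpu_offload and device == "cuda":
        # Keeps idle sub-models on the CPU; requires the accelerate package.
        model.enable_cpu_offload()

    return model
```

Half precision and CPU offload mainly reduce memory use, while BetterTransformer targets speed, so which flags are worth enabling depends on whether memory or latency is the constraint.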

Conclusion

Bark, developed by Suno and integrated with Hugging Face's Transformers library, is a versatile tool for audio generation, with applications in virtual assistants, audiobooks, and creative multimedia projects.

Key Aspects of Bark's Versatility and Applications:

- Multilingual Capabilities: Bark's proficiency in generating realistic speech in multiple languages is a game-changer. This feature makes it highly suitable for global applications, offering a seamless experience in creating content for diverse linguistic audiences.

- Creative Flexibility: Beyond standard speech synthesis, Bark excels in producing music, background noises, and sound effects. This allows creators, musicians, and sound engineers to explore new creative horizons, experiment with different sounds, and bring their artistic visions to life in unique ways.

- Nonverbal Sound Generation: The ability to generate nonverbal sounds such as laughter, sighs, and crying adds an emotional depth to audio content, making it more engaging and lifelike. This feature enhances user experience in storytelling, gaming, and interactive media.

- Easy Accessibility and Integration: For those with a background in Python and machine learning, integrating Bark with the Transformers library is straightforward. This ease of access lowers the barrier to entry for developers and researchers, allowing them to leverage Bark's capabilities without extensive training in audio processing.

- Virtual Assistants and Audiobooks: Bark's realistic speech generation is particularly beneficial for developing virtual assistants that require natural-sounding, responsive voice interactions. In the realm of audiobooks, it offers an innovative approach to narrate stories, potentially revolutionizing the way we consume literary content.

- Educational and Accessibility Tools: Bark's text-to-audio conversion can be a powerful tool in educational settings and for creating accessibility tools for the visually impaired. It can transform written material into audio format, making information more accessible to a wider audience.

- Customization and Voice Presets: With over 100 speaker presets across various languages, users have the flexibility to customize the voice to fit the specific needs of their projects. This customization enriches the user experience by providing a wide range of tonal qualities and accents.

- Research and Development: Suno’s decision to provide access to pretrained model checkpoints for Bark significantly contributes to research in AI and audio synthesis. It fosters an environment for experimentation and innovation, encouraging advancements in the field.

- Optimization Techniques: Bark comes with various optimization techniques, like using half-precision floating points and CPU offload, to enhance performance and efficiency. This makes it suitable for use in environments with limited computational resources.

Taken together, these capabilities make Bark a significant step forward in audio generation. Its versatility, ease of use, and range of applications make it useful in fields from entertainment and education to accessibility.

For more detailed instructions and advanced features, refer to the Bark documentation on Hugging Face and GitHub.