Clap: Complete Guide 2024

Introduction

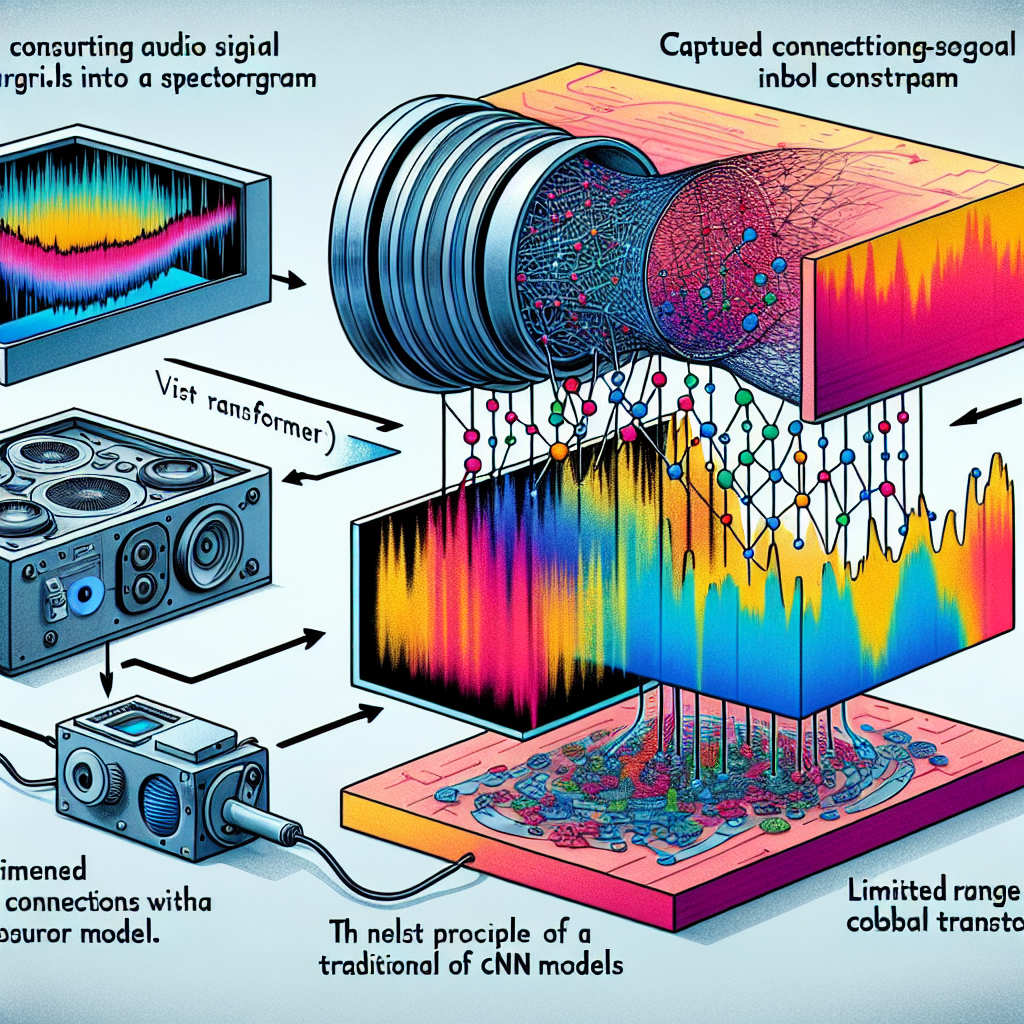

The intersection of artificial intelligence and audio processing has unveiled remarkable innovations that are transforming how machines understand and interact with the world through sound. Among the most notable advancements in this domain is the development of the Audio Spectrogram Transformer (AST), a model that has redefined the benchmarks for audio classification tasks. Drawing inspiration from the Vision Transformer, a model renowned for its impact on image processing, AST adapts and extends these principles to the realm of audio data. By converting audio into a spectrogram, essentially treating it like an image, AST leverages the power of attention mechanisms to unearth patterns within the audio signals that were previously difficult to capture. This introduction elaborates on the inception, architectural innovation, and benchmarking excellence of the AST model, setting the stage for an in-depth discussion on its capabilities and transformative impact on audio classification.

The Inspiration Behind AST

The genesis of the Audio Spectrogram Transformer model is rooted in a reevaluation of the prevailing methodologies in audio classification. For years, convolutional neural networks (CNNs) have been the cornerstone of audio classification models, tasked with learning direct mappings from audio spectrograms to their corresponding labels. Despite their successes, the reliance on CNNs posed limitations, particularly in capturing the long-range dependencies within audio data. This prompted a pivotal question: Could a model, devoid of convolutional layers and solely based on attention mechanisms, not only match but potentially exceed the performance of CNNs in audio classification? AST emerged as a resounding affirmation to this inquiry, challenging conventional approaches and spearheading a new direction in audio processing research.

AST's Architectural Innovation

Diverging from the traditional reliance on convolutional layers, the AST model is built on a purely attention-based architecture, which lets it capture long-range global context across the whole clip. The input spectrogram is split into a sequence of overlapping 16x16 patches, each patch is linearly projected to an embedding, and a learnable positional embedding is added. The resulting sequence is processed by a standard Transformer encoder, and a classification token prepended to the sequence provides the representation used to predict the label.
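To make the patch-based input concrete, here is a minimal NumPy sketch of cutting a spectrogram into overlapping patches. The patch size of 16 and stride of 10 (an overlap of 6) follow the settings reported for AST; the function name `split_into_patches` and the random placeholder input are assumptions for illustration, not part of any library.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def split_into_patches(spectrogram, patch_size=16, stride=10):
    """Cut a (mel_bins, frames) spectrogram into flattened, overlapping square patches."""
    windows = sliding_window_view(spectrogram, (patch_size, patch_size))
    windows = windows[::stride, ::stride]  # keep every `stride`-th window on both axes
    return windows.reshape(-1, patch_size * patch_size)

# Placeholder input: 128 mel bins x 1024 frames (about 10 s at a 10 ms hop)
spectrogram = np.random.randn(128, 1024).astype(np.float32)
patches = split_into_patches(spectrogram)
print(patches.shape)  # (1212, 256): 12 patches along frequency x 101 along time
```

In the model itself, each 256-value patch would then be projected to an embedding vector before entering the Transformer encoder; that step is omitted here.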

Benchmarking Excellence

The efficacy of the AST model is not merely theoretical: when it was published, AST set new state-of-the-art results on AudioSet, ESC-50, and Speech Commands V2. The reported figures are given under AST Model Insights.

Overview

The Audio Spectrogram Transformer (AST) ushers in a new era for audio classification, offering a novel approach that diverges significantly from traditional methodologies. Conceptualized by Yuan Gong, Yu-An Chung, and James Glass in their seminal paper, "AST: Audio Spectrogram Transformer," this model transcends conventional boundaries by applying the principles of Vision Transformers to audio data. By transforming audio signals into spectrogram images, the AST achieves superior performance in classifying a wide array of audio types, setting new benchmarks in the field.

AST Model Insights

Over the last decade, convolutional neural networks (CNNs) have become the backbone of audio classification systems, learning a direct mapping from audio spectrograms to their corresponding labels. To better capture long-range global context, a later trend added self-attention mechanisms on top of CNNs, forming CNN-attention hybrid models. That trend raised the question of whether the CNN is necessary at all, or whether a purely attention-based network can reach high audio classification performance on its own.

The AST model emerges as a revolutionary response to these questions, marking a departure from CNNs by relying exclusively on attention mechanisms. This shift is evidenced by its impressive performance across various benchmarks, including a 0.485 mean Average Precision (mAP) on AudioSet, 95.6% accuracy on ESC-50, and an astounding 98.1% accuracy on Speech Commands V2. Such results not only highlight the model's efficacy but also its pioneering status in the field.
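Mean Average Precision, the AudioSet metric quoted above, can be made concrete with a short sketch. The helper names and the toy labels and scores below are invented for illustration; the code assumes every class has at least one positive example and is not the evaluation script used in the paper.

```python
import numpy as np

def average_precision(labels, scores):
    """Average precision for one class: mean precision at the rank of each positive."""
    order = np.argsort(-np.asarray(scores, dtype=float))
    ranked = np.asarray(labels)[order]
    hits = np.cumsum(ranked)
    precision_at_k = hits / np.arange(1, len(ranked) + 1)
    return float(precision_at_k[ranked == 1].mean())

def mean_average_precision(label_matrix, score_matrix):
    """Mean of the per-class average precision; columns are classes."""
    label_matrix = np.asarray(label_matrix)
    score_matrix = np.asarray(score_matrix)
    per_class = [
        average_precision(label_matrix[:, c], score_matrix[:, c])
        for c in range(label_matrix.shape[1])
    ]
    return float(np.mean(per_class))

# Toy multi-label example: 4 clips, 2 classes
labels = [[1, 0], [0, 1], [1, 0], [0, 0]]
scores = [[0.9, 0.2], [0.8, 0.7], [0.7, 0.1], [0.1, 0.3]]
print(mean_average_precision(labels, scores))
```

Because each class is scored independently and then averaged, rare classes weigh as much as common ones in the final figure.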

Architectural Highlights

Distinguished by its unique approach, the AST model converts audio into spectrogram images, leveraging the robust capabilities of Vision Transformer architecture, tailored for audio analysis. This strategic adaptation allows the AST to detect and interpret complex audio patterns with remarkable precision, thereby achieving state-of-the-art results in audio classification tasks. The model's architecture is a testament to the innovative fusion of audio and visual processing techniques.

Practical Usage Tips

To fully harness the capabilities of the Audio Spectrogram Transformer in your projects, adherence to specific implementation guidelines is paramount. Input normalization is essential, with inputs requiring a mean of 0 and a standard deviation of 0.5. The ASTFeatureExtractor facilitates this preprocessing, utilizing default parameters derived from AudioSet. Moreover, the AST's performance is highly dependent on the learning rate; a rate substantially lower than that used for CNN models is recommended, alongside an appropriate learning rate scheduler. These practices are crucial for optimizing the model's efficacy and ensuring successful integration into various applications.

Implementation and Integration

Incorporating the AST into your project involves understanding its operational parameters and the environmental setup. Utilizing the official code repository and the Hugging Face Transformers library provides a straightforward pathway to implementation. The ASTConfig class allows for customization of the model's parameters, including the number of attention heads and hidden layers, enabling fine-tuning to specific requirements. For practical application, the ASTFeatureExtractor plays a vital role in preparing audio data for the model, ensuring it is in the optimal format for processing.

from transformers import ASTModel, ASTConfig

# Initialize configuration; architecture options such as the number of
# attention heads must be set here, before the model is built
config = ASTConfig(num_attention_heads=16)  # Example modification

# Instantiate the model with the initialized configuration
model = ASTModel(config)

This snippet illustrates the initial steps in configuring and instantiating the AST model for customized application needs. Through such configurations, developers can tailor the model to suit a variety of audio classification challenges.

Limitations

The Audio Spectrogram Transformer (AST), despite setting new benchmarks in audio classification, comes with a set of limitations that present both challenges and opportunities for further research and development. Understanding these limitations is essential for effectively leveraging AST in various applications and for guiding future improvements.

Computational Resources

One of the foremost limitations of the AST model lies in its demand for significant computational resources. Given its architecture that leverages a purely attention-based mechanism, the AST requires substantial memory and processing power. This is particularly pronounced when processing large-scale audio datasets or performing complex audio classification tasks.

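# Rough estimate of self-attention memory for one default-sized input (illustrative only)
sequence_length = 1214  # 1212 patches plus 2 special tokens for a 1024 x 128 input
num_heads, num_layers, bytes_per_value = 12, 12, 4  # default configuration, float32
# Attention weights grow quadratically with sequence length; total compute also grows with dataset size
attention_bytes = sequence_length ** 2 * num_heads * num_layers * bytes_per_value
print(f"{attention_bytes / 1e6:.0f} MB of attention weights per clip")

This high demand for resources may limit the model's accessibility to individuals or organizations without the necessary computational infrastructure, potentially hindering wider adoption and experimentation.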

Data Normalization

The necessity for precise input normalization poses another challenge. The AST model's performance is highly dependent on the input features being normalized to have a mean of 0 and a standard deviation of 0.5. This preprocessing step, crucial for achieving optimal results, introduces additional complexity in dataset preparation.

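# Normalization of log-mel features (placeholder data stands in for a real dataset)
import numpy as np
mean, std_dev = -4.2677393, 4.5689974  # AudioSet statistics (ASTFeatureExtractor defaults)
dataset = [np.random.randn(1024, 128) * std_dev + mean]  # placeholder log-mel features
for features in dataset:
    # Dividing by 2 * std_dev gives a standard deviation of about 0.5
    normalized = (features - mean) / (2 * std_dev)
    # Proceed with normalized data

Learning Rate Sensitivity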

AST's sensitivity to the learning rate is a critical limitation. Unlike traditional Convolutional Neural Networks (CNNs) used in audio classification, AST requires a much lower learning rate. Identifying the optimal learning rate and scheduler becomes a task of paramount importance, necessitating extensive tuning and experimentation.
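One way to picture a low learning rate paired with a scheduler is the step-decay sketch below. The function name `step_decay_lr` and all default values (a base rate of 1e-5, halved every epoch after the second) are illustrative assumptions and should be tuned for the dataset at hand.

```python
def step_decay_lr(epoch, base_lr=1e-5, decay_start=2, decay_every=1, factor=0.5):
    """Learning rate for a 1-indexed epoch: constant at first, then scaled by `factor` at intervals."""
    if epoch <= decay_start:
        return base_lr
    num_decays = (epoch - decay_start - 1) // decay_every + 1
    return base_lr * factor ** num_decays

# Print the schedule for a short five-epoch run
for epoch in range(1, 6):
    print(epoch, step_decay_lr(epoch))
```

In a PyTorch training loop, a function like this could be wrapped in `torch.optim.lr_scheduler.LambdaLR` by returning the ratio to the base rate instead of the absolute value.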

Generalization Across Diverse Audio Tasks

Although AST demonstrates exceptional performance in audio classification, its generalizability across a broad spectrum of audio tasks remains less understood. The model's efficacy in areas such as audio generation, source separation, and more complex audio processing tasks is an open question that warrants further investigation.

Training Duration

The training duration of AST, despite its reported quick convergence, can be extensive for achieving peak performance. This aspect may act as a bottleneck in scenarios requiring rapid iterative development or when computational resources are limited.

Adaptation to Real-World Scenarios

AST's application in real-world scenarios faces challenges due to its reliance on high-quality, clean audio datasets for training. The model's performance in noisy, real-world environments where audio data may be of lower quality or contain various background sounds is an area that needs further exploration.
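A simple way to probe robustness to noise is to mix clean clips with background noise at a controlled signal-to-noise ratio and compare predictions before and after. The sketch below shows only the mixing step; the function name `mix_at_snr` and the synthetic tone and noise are assumptions for illustration, and the noise is assumed to be at least as long as the clean signal.

```python
import numpy as np

def mix_at_snr(clean, noise, snr_db):
    """Scale `noise` so the clean-to-noise power ratio equals `snr_db`, then add it."""
    clean = np.asarray(clean, dtype=np.float64)
    noise = np.asarray(noise, dtype=np.float64)[: len(clean)]
    clean_power = np.mean(clean ** 2)
    noise_power = np.mean(noise ** 2)
    target_noise_power = clean_power / (10 ** (snr_db / 10))
    return clean + noise * np.sqrt(target_noise_power / noise_power)

# Synthetic example: a 440 Hz tone mixed with white noise at 10 dB SNR
rng = np.random.default_rng(0)
t = np.arange(16000) / 16000
clean = np.sin(2 * np.pi * 440 * t)
noise = rng.standard_normal(16000)
noisy = mix_at_snr(clean, noise, snr_db=10)
```

Sweeping `snr_db` from high to low values gives a rough picture of how quickly classification quality degrades as conditions worsen.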

Interpretability and Explainability

Finally, the interpretability of AST models remains a challenge, as is common with deep learning models, particularly those based on transformers. Understanding the decision-making process and what the model "listens" to when making classifications is crucial for trust and reliability, especially in critical applications.

How to Utilize the Model

This section is dedicated to guiding you through the essential steps to effectively utilize the model's capabilities in your projects. Our aim is to provide a structured approach to help you seamlessly integrate this advanced tool, maximizing its potential for your specific needs.

Preparing Your Environment

Initial Setup

Before diving into the technicalities, it's crucial to prepare your working environment. This involves installing the necessary libraries and dependencies, ensuring a smooth integration process. A well-prepared environment is the foundation for effectively working with the model.

- Installation Command:

pip install transformers

Environment Considerations

- Ensure compatibility of the installed libraries with your system.

- Verify the availability of computational resources, especially if working with large models.
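The checks above can be partly automated. The sketch below, using only the standard library, reports which packages are importable; the helper name `check_packages` and the default package list are assumptions chosen to match the libraries used in this guide.

```python
import importlib.util

def check_packages(names=("transformers", "torch", "librosa")):
    """Return a mapping from package name to whether it can be imported."""
    return {name: importlib.util.find_spec(name) is not None for name in names}

for name, available in check_packages().items():
    print(f"{name}: {'ok' if available else 'missing'}")
```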

Model Configuration

Understanding Model Parameters

Each model comes equipped with a set of configurable parameters tailored to different tasks and datasets. Familiarizing yourself with these parameters is crucial for customizing the model to best suit your objectives.

Key Parameters to Consider:

- Learning rate

- Batch size

- Number of epochs

Configuring Your Model

- Utilize the ASTConfig class to adjust the model settings according to your needs.

from transformers import ASTConfig

config = ASTConfig(hidden_size=768, num_attention_heads=12, num_hidden_layers=12)

Loading the Model

Initialization

With the environment and model configuration set up, the next step is loading the model. This involves initializing the model with your specific configuration.

- Example Code:

from transformers import ASTModel

model = ASTModel(config=config)

Data Preprocessing

Preparing Your Data

Transforming your raw data into a compatible format is essential for model training. This step is vital for the model's learning process, affecting its ability to understand and generate accurate predictions.

Considerations:

- Normalize audio inputs

- Convert audio to spectrograms (for AST, log-mel filter bank features)

- Text tokenization applies only to NLP models; AST takes audio features alone

Model Training

The Learning Phase

Training the model is a critical phase where it learns from the processed data. This step involves feeding the data through the model multiple times, optimizing its parameters for better accuracy and performance.

Training Tips:

- Monitor loss and accuracy metrics to gauge progress.

- Adjust training parameters as needed based on performance.
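The tips above can be sketched as a very small training loop that records loss and accuracy each epoch. Everything here is a placeholder: the model is deliberately tiny and randomly initialized, the batch is random data, and the hyperparameters are assumptions picked so the loop runs quickly on a CPU rather than recommended settings.

```python
import torch
from transformers import ASTConfig, ASTForAudioClassification

torch.manual_seed(0)

# Deliberately tiny, randomly initialized model (not a realistic configuration)
config = ASTConfig(
    hidden_size=32, num_hidden_layers=1, num_attention_heads=2,
    intermediate_size=64, max_length=64, num_mel_bins=32, num_labels=4,
)
model = ASTForAudioClassification(config)
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-4)

# Placeholder batch: 8 random "spectrograms" of shape (max_length, num_mel_bins)
inputs = torch.randn(8, 64, 32)
labels = torch.randint(0, 4, (8,))

history = []
model.train()
for epoch in range(3):
    outputs = model(input_values=inputs, labels=labels)  # loss is computed from the labels
    optimizer.zero_grad()
    outputs.loss.backward()
    optimizer.step()
    accuracy = (outputs.logits.argmax(dim=-1) == labels).float().mean().item()
    history.append((outputs.loss.item(), accuracy))
    print(f"epoch {epoch + 1}: loss={history[-1][0]:.4f} accuracy={accuracy:.2f}")
```

In a real project the metrics would be computed on a held-out validation set rather than on the training batch, and the recorded history would drive decisions such as lowering the learning rate or stopping early.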

Evaluation and Fine-Tuning

Assessing Model Performance

Evaluating the model's performance post-training is crucial for identifying its strengths and weaknesses. This step helps in pinpointing areas that require further optimization.

Fine-Tuning Strategies:

- Adjust learning rates

- Experiment with different optimizer algorithms

- Incorporate additional training data

Deployment

Bringing Your Model to Production

Deploying the model into a production environment is the culmination of your project, where it begins delivering real-world value. Effective deployment strategies are key to ensuring the model operates efficiently and accurately on new data.

Deployment Considerations:

- Choose an appropriate deployment platform based on your project's scale and requirements.

- Ensure robust security measures are in place to protect your model and data.

Implementing an Audio Spectrogram Transformer in Python

In this expanded guide, we dive deeper into how to implement an Audio Spectrogram Transformer (AST) for audio classification tasks using Python. The walkthrough covers everything from setting up your environment to running inference with real audio data, providing a comprehensive understanding of applying AST in practical scenarios.

Environment Setup

Installing Dependencies

Before diving into the code, it's crucial to set up a proper Python environment. The transformers library by Hugging Face, alongside torch, forms the backbone of our implementation, providing access to pre-trained models and utilities for working with them.

pip install transformers torch librosa

Importing Libraries

Once the environment is ready, import the necessary libraries. We'll need ASTConfig and ASTForAudioClassification from the transformers package for our model configuration and classification tasks, respectively. torch is essential for handling tensors, and we'll also introduce librosa for audio processing.

from transformers import ASTConfig, ASTForAudioClassification
import torch
import librosa

Model Initialization

Configuring the AST Model

Configuring our AST model is the first step toward audio classification. The configuration includes specifying the model dimensions, such as the size of hidden layers and attention heads. Adjust these parameters based on your specific needs or constraints.

ast_config = ASTConfig(
    hidden_size=768,
    num_hidden_layers=12,
    num_attention_heads=12,
    hidden_dropout_prob=0.1,
    attention_probs_dropout_prob=0.1
)

Instantiating the Model

With our configuration defined, we instantiate the AST model. This model, built upon the Transformer architecture, is particularly adept at capturing the global context of audio data, making it powerful for classification tasks.

ast_model = ASTForAudioClassification(ast_config)

Data Preparation

Loading and Processing Audio Data

Before classification, audio data must be adequately prepared. This includes loading audio files, converting them to spectrograms, and then formatting these spectrograms into a structure that our AST model can process. librosa is a handy library for audio processing tasks like these.

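import numpy as np

def load_and_process_audio(file_path, sampling_rate=16000, max_length=1024, num_mel_bins=128):
    # Load audio file, resampled to mono at the given rate
    audio, sr = librosa.load(file_path, sr=sampling_rate)
    # Convert to a log-mel spectrogram of shape (frames, num_mel_bins) with a 10 ms hop;
    # a librosa approximation of the Kaldi-style filter banks ASTFeatureExtractor computes
    mel = librosa.feature.melspectrogram(y=audio, sr=sr, n_fft=1024, hop_length=160, n_mels=num_mel_bins)
    spectrogram = librosa.power_to_db(mel).T
    # Per-clip normalization to mean 0 and standard deviation of about 0.5
    spectrogram = (spectrogram - spectrogram.mean()) / (2 * spectrogram.std() + 1e-8)
    # Truncate or zero-pad to the fixed number of frames the model expects
    spectrogram = spectrogram[:max_length]
    spectrogram = np.pad(spectrogram, ((0, max_length - len(spectrogram)), (0, 0)))
    return spectrogram.astype(np.float32)

# Example usage
spectrogram = load_and_process_audio("path/to/audio/file.wav")

Preparing the Input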

Transform the spectrogram into a format acceptable by our model. This might involve resizing the spectrogram and converting it into a tensor that PyTorch can work with.

# Convert to a float tensor and add a batch dimension
spectrogram_input = torch.tensor(spectrogram, dtype=torch.float32).unsqueeze(0)

Performing Inference

Classifying Audio

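Once the input is prepared, it's straightforward to classify audio using our model. The model outputs logits, and the index of the largest logit is the most likely class. Keep in mind that a model built directly from a configuration has randomly initialized weights, so its predictions are only meaningful after training or after loading pretrained weights.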

ast_model.eval()  # disable dropout for inference

with torch.no_grad():
    outputs = ast_model(spectrogram_input)

predictions = torch.argmax(outputs.logits, dim=-1)

Conclusion

By meticulously following these steps, you've learned how to leverage the power of an Audio Spectrogram Transformer for audio classification. This guide has not only walked you through the implementation details but also introduced you to essential preprocessing steps for handling audio data.

Conclusion

Embarking on the journey through the dynamic and ever-evolving landscape of audio processing technologies, the Audio Spectrogram Transformer (AST) stands out as a beacon of innovation. This groundbreaking model ingeniously leverages the robust capabilities of the Vision Transformer, applying it to audio data by transforming intricate audio signals into visually interpretable spectrogram images. This novel approach not only redefines benchmarks within the realm of audio classification but also illuminates new pathways for exploration and innovation for both researchers and developers alike.

Unmatched Performance

The AST distinguishes itself by its exceptional ability to capture the nuanced, long-range global contexts inherent in audio data, all without the reliance on conventional convolutional neural networks (CNNs). This marks a pivotal shift from traditional methodologies, affirming the AST's superior performance across a spectrum of audio classification benchmarks. These achievements underscore the model's unparalleled effectiveness and efficiency in understanding and classifying complex audio landscapes.

Pioneering Approach

By championing a purely attention-based architectural framework, the AST boldly challenges established norms, prompting a reevaluation of the necessity and dominance of CNNs within the field of audio processing. The success of the AST illuminates the vast, yet largely untapped potential of attention mechanisms in dissecting and classifying complex audio datasets. This realization opens up new avenues for exploration and underscores the transformative power of attention-based models in advancing our understanding of audio data.

Practical Insights

For practitioners and enthusiasts eager to leverage the AST's formidable capabilities in their projects, attention to detail in areas such as input normalization and learning rate optimization is paramount. The ASTFeatureExtractor emerges as a critical tool in this endeavor, ensuring that input data is meticulously normalized to facilitate optimal model performance. Additionally, the nuanced adjustment of learning rates and schedulers plays a pivotal role in harnessing the full potential of the AST for tailored applications and datasets.

Future Directions

As we stand at the threshold of a new frontier in audio processing, the AST not only significantly enhances our existing toolkit but also serves as a wellspring of inspiration for future innovations. Its versatility and exemplary performance lay the groundwork for continued exploration and advancement in the domains of audio classification and beyond. The journey ahead promises exciting developments, as the AST paves the way for further breakthroughs in our quest to decode the complexities of audio data.