How to Create Smooth, Realistic AI-Generated Videos with AnimateDiff and ST-MFNet on Replicate

Introduction

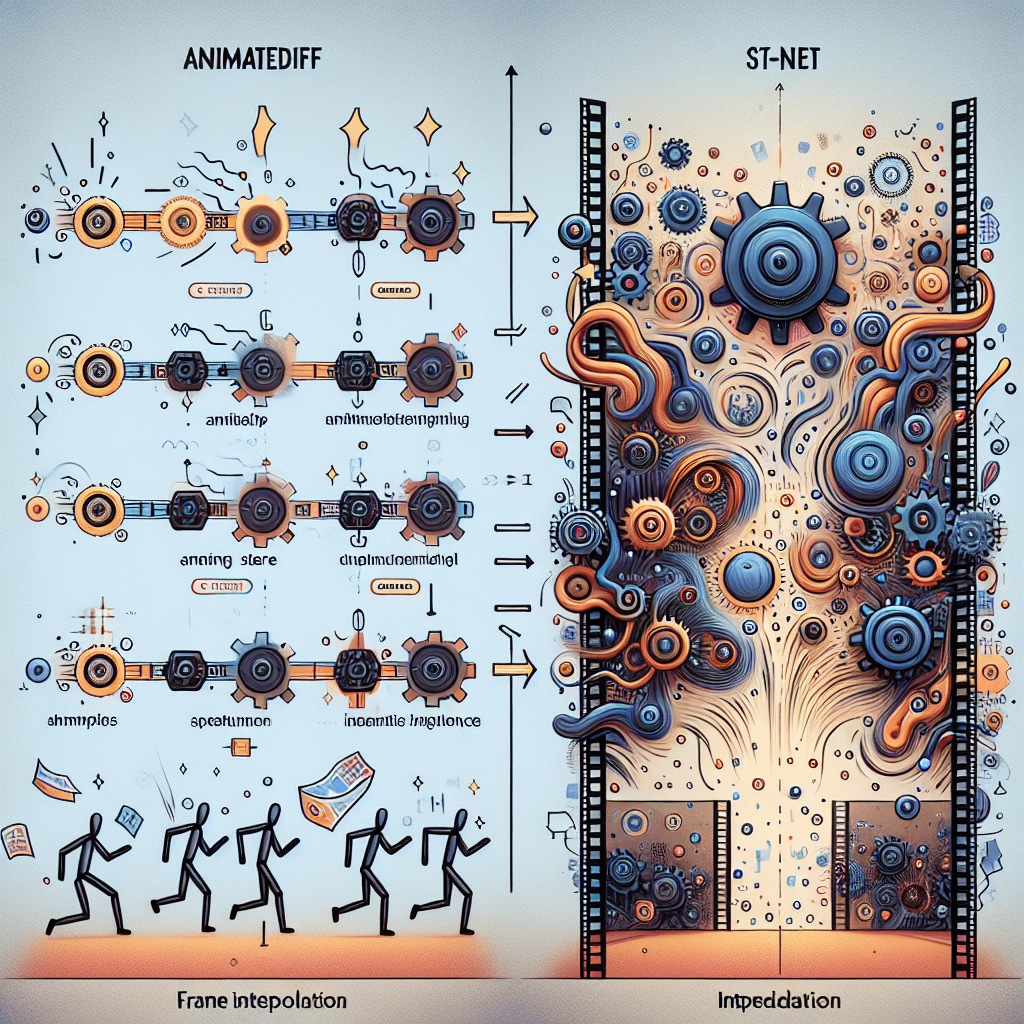

Producing lifelike animation from a text prompt used to be out of reach for most creators. With recent AI models, generating smooth, realistic video from a textual description is not only possible but increasingly accessible. This blog post walks through the process, focusing on two tools that work well together: AnimateDiff, a motion modeling tool, and ST-MFNet, a frame interpolator. Combined, they produce animations that are both visually appealing and more realistic in motion than was practical until recently.

AnimateDiff: The Genesis of Motion

At the heart of our discussion lies AnimateDiff, a model that builds on existing text-to-image models by adding a motion modeling module. This module, trained on a diverse array of video clips, has learned realistic motion dynamics. It lets Stable Diffusion text-to-image models generate animated sequences in styles that range from whimsical anime to lifelike photographs. In short, AnimateDiff turns a text description into an animation, opening new possibilities for creators.

Mastering Camera Movement

Camera movement is a large part of what makes a video engaging. AnimateDiff supports it through LoRAs, lightweight extensions that make fine-tuning large models faster and cheaper. Popularized for fine-tuning Stable Diffusion models, LoRAs have been adapted to control AnimateDiff's motion module. The result is eight specialized LoRAs, each trained to simulate a specific camera movement such as panning, zooming, or rotating. Creators can use them to steer how the camera moves through a scene, adding depth and dimension to the narrative.

ST-MFNet: Smoothing the Edges

Turning a static image into fluid animation takes more than motion; it also takes smoothness. ST-MFNet is a spatio-temporal multi-flow network designed for frame interpolation. It analyzes the spatial (position) and temporal (time) changes between frames and generates additional frames to make the video more fluid. Applied to AnimateDiff-generated videos, ST-MFNet raises the frame rate and produces noticeably smoother motion. It can turn a 2-second clip into a high-frame-rate version of the same length or stretch it into a slow-motion effect.
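For intuition about what "generating additional frames" means, the sketch below inserts one blended frame between each pair of neighbouring frames using plain per-pixel averaging. This is deliberately not how ST-MFNet works: it is a naive baseline, whereas ST-MFNet estimates motion between frames. The function name `blend_interpolate` and the tiny grayscale "frames" are illustrative assumptions.

```python
from typing import List

Frame = List[float]  # a toy "frame": a flat list of grayscale pixel values


def blend_interpolate(frames: List[Frame]) -> List[Frame]:
    """Insert one linearly blended frame between each pair of neighbours.

    Naive baseline for intuition only; a learned interpolator such as
    ST-MFNet models motion instead of averaging pixel values.
    """
    if len(frames) < 2:
        return [list(frame) for frame in frames]
    result: List[Frame] = []
    for current, following in zip(frames, frames[1:]):
        result.append(list(current))
        # Midpoint frame: per-pixel average of the two neighbouring frames.
        result.append([(a + b) / 2 for a, b in zip(current, following)])
    result.append(list(frames[-1]))
    return result


if __name__ == "__main__":
    clip = [[0.0, 0.0], [1.0, 0.5], [2.0, 1.0]]
    for frame in blend_interpolate(clip):
        print(frame)
```

Averaging like this tends to produce ghosting on fast-moving objects, which is one reason a motion-aware interpolator is preferred for real footage.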

Through this introduction, we aim to not only acquaint you with the tools at your disposal but also inspire you to explore the vast potential that lies in combining AnimateDiff with ST-MFNet. As we delve deeper into the technicalities and creative prospects of these AI-driven models, we invite you to embark on this journey of discovery, where imagination meets innovation, leading to the creation of animated videos that transcend the ordinary. Stay tuned as we explore the intricacies of these models and provide you with the knowledge to harness their full potential.

Overview

In this comprehensive guide, we unveil the synergy between AnimateDiff and ST-MFNet, two cutting-edge technologies that together revolutionize the creation of AI-generated videos. This duo empowers users to transform mere text prompts into dynamic, high-frame-rate videos, complete with realistic motions and customizable camera movements. Whether you're aiming for the fluidity of animation or the precision of a photograph, this guide will navigate you through the process of breathing life into your visions.

AnimateDiff: The Foundation of Motion

At the heart of our video creation process lies AnimateDiff, a pioneering model that extends the capabilities of conventional text-to-image models by incorporating a motion modeling module. This module, refined through extensive training on a diverse array of video clips, is adept at capturing the essence of realistic motion dynamics. This innovative approach allows for the generation of animated outputs that can range from the whimsical charm of anime to the stark realism of photographs. The beauty of AnimateDiff lies in its ability to open a portal to animation for Stable Diffusion text-to-image models, offering an unparalleled level of dynamism and fluidity.

Camera Movement Control: Choreographing Your View

To further enhance the visual storytelling, AnimateDiff introduces the capability to control camera movements through the implementation of LoRAs. Short for Low-Rank Adaptations, LoRAs are efficient solutions to expedite the fine-tuning process of large models without the burden of excessive memory usage. Originally celebrated for their application in Stable Diffusion models, these lightweight model extensions are now ingeniously applied to AnimateDiff's motion module. The original creators of AnimateDiff have meticulously trained eight distinct LoRAs to cater to specific camera movements:

- Pan Up

- Pan Down

- Pan Left

- Pan Right

- Zoom In

- Zoom Out

- Rotate Clockwise

- Rotate Anti-clockwise

These movements can be adjusted in intensity (ranging from 0 to 1) and combined in various ways to achieve the desired visual effect. This flexibility allows creators to precisely choreograph the camera's path, adding a layer of depth and immersion to the animated video.
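As a rough sketch of how such a combination might be assembled in code, the helper below validates each strength against the 0 to 1 range and builds a dictionary of input fields. The field names (for example `zoom_in_motion_strength`) and the helper `camera_inputs` are assumptions made for illustration; check the model's input schema on Replicate for the actual parameter names before using them.

```python
from typing import Dict

# Hypothetical identifiers, one per camera-movement LoRA in the list above.
CAMERA_MOVES = (
    "pan_up", "pan_down", "pan_left", "pan_right",
    "zoom_in", "zoom_out", "rotate_clockwise", "rotate_anticlockwise",
)


def camera_inputs(**strengths: float) -> Dict[str, float]:
    """Validate movement strengths and return them as model input fields."""
    inputs: Dict[str, float] = {}
    for move, strength in strengths.items():
        if move not in CAMERA_MOVES:
            raise ValueError(f"unknown camera movement: {move}")
        if not 0.0 <= strength <= 1.0:
            raise ValueError(f"{move} strength must be between 0 and 1")
        # Assumed naming scheme: "<move>_motion_strength".
        inputs[f"{move}_motion_strength"] = float(strength)
    return inputs


if __name__ == "__main__":
    # Combine a gentle zoom with a stronger pan to the right.
    print(camera_inputs(zoom_in=0.3, pan_right=0.8))
```

The resulting dictionary could then be merged with the prompt, for example `{"prompt": "...", **camera_inputs(zoom_in=0.3)}`, and passed as the model input.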

ST-MFNet: Elevating Frame Rates for Smoothness

To complement AnimateDiff, ST-MFNet serves as a frame interpolator that makes the generated videos smoother. This 'spatio-temporal multi-flow network' adds extra frames to a video by analyzing how objects change position and how they evolve over time. By considering multiple potential movements between frames, ST-MFNet increases the frame rate, which yields smoother playback or, if the extra frames are used to lengthen the clip instead, a slow-motion sequence. Paired with AnimateDiff, it can turn a standard 2-second, 16 fps video into a 2-second video at 32 or 64 fps, or into a 4-second slow-motion version.
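The arithmetic behind those numbers can be sketched as follows. The helper `interpolated_clip` is hypothetical, and it assumes the frame count scales exactly by the multiplier; the flag name mirrors the `keep_original_duration` parameter used later in this guide, and its modelled behaviour is a reading of the description above rather than a statement about the model's internals.

```python
from typing import Tuple


def interpolated_clip(duration_s: float, fps: int, multiplier: int,
                      keep_original_duration: bool = True) -> Tuple[float, int]:
    """Return (duration in seconds, fps) of a clip after frame interpolation.

    Simplification: assumes the frame count scales exactly by `multiplier`.
    """
    total_frames = duration_s * fps * multiplier
    if keep_original_duration:
        # Same length, more frames per second: smoother playback.
        new_fps = fps * multiplier
    else:
        # Same playback rate, more frames: a longer, slow-motion clip.
        new_fps = fps
    return total_frames / new_fps, new_fps


if __name__ == "__main__":
    print(interpolated_clip(2, 16, 2))         # smoother, same length
    print(interpolated_clip(2, 16, 4))         # smoother still, same length
    print(interpolated_clip(2, 16, 2, False))  # slow motion
```

A 2-second, 16 fps clip holds 32 frames. Doubling the frames gives 64, which plays back as 2 seconds at 32 fps or as 4 seconds at the original 16 fps.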

Through this detailed overview, we've explored the synergistic capabilities of AnimateDiff and ST-MFNet in creating AI-generated videos that are not just visually appealing but are smooth, realistic, and fully customizable. This guide serves as your pathway to mastering these innovative tools, enabling you to bring your text-based visions to life in a way that's both dynamic and engaging.

10 Innovative Uses for AnimateDiff and ST-MFNet

Creating animated content and enhancing video quality have never been more accessible thanks to advanced AI tools like AnimateDiff and ST-MFNet. Below, we explore ten creative and practical applications for these technologies, helping your projects stand out with smooth, high-frame-rate animations.

Dynamic Storytelling

Craft vivid and engaging narratives by transforming text prompts into animated sequences. AnimateDiff lets you breathe life into your stories, making them more immersive. From fantasy to real-world scenarios, the possibilities are boundless.

Educational Content

Elevate educational materials by incorporating animations that explain complex concepts with ease. Use AnimateDiff to create animated diagrams or scenarios, making learning more interactive and enjoyable.

Marketing and Advertisements

Produce eye-catching ads with smooth transitions and lifelike animations. Utilize ST-MFNet to enhance frame rates, ensuring your advertisements capture and retain viewer attention with their fluidity.

Music Videos

Bring music to life with animated backgrounds or storylines that complement the rhythm and lyrics. AnimateDiff can match the vibe of any genre, adding a visual layer to the auditory experience.

Product Demonstrations

Showcase product features and functionalities through animated demonstrations. High frame rate animations can detail each aspect with clarity, providing a comprehensive view of the product.

Social Media Content

Stand out on social media platforms with animations that pop. Whether it's for Instagram stories or Twitter posts, AnimateDiff and ST-MFNet can help create content that engages and grows your follower base.

Video Game Design

Design video game cutscenes or trailers with custom animations. AnimateDiff allows for the creation of unique characters and environments, while ST-MFNet ensures smooth gameplay previews.

Film and Series Production

Experiment with animated sequences in films or series, whether for opening credits, dream sequences, or entire episodes. These tools offer filmmakers a new avenue for creativity and storytelling.

Art and Digital Installations

Create digital art installations or projections with seamless animations. The combination of AnimateDiff and ST-MFNet can produce mesmerizing visual experiences for galleries, museums, or public spaces.

Personal Projects and Hobbies

For enthusiasts and hobbyists, these tools open up a world of animation and video production. Create personal projects or gifts, like animated greeting cards or short clips, adding a personal touch to your digital creations.

Each of these use cases demonstrates the versatility and potential of AnimateDiff and ST-MFNet in various fields. By harnessing the power of AI for animation and video enhancement, users can push the boundaries of creativity and produce high-quality content with ease.

Utilizing AnimateDiff and ST-MFNet in Python for Video Creation

Creating captivating videos from textual prompts has never been easier, thanks to the integration of AnimateDiff and ST-MFNet. This section will guide you through the process of employing these powerful tools in Python, ensuring that your journey from a simple text prompt to a high-frame-rate video is both smooth and straightforward.

Incorporating AnimateDiff

The first step in our journey involves generating an initial animation using AnimateDiff. This model stands out by augmenting text-to-image models with a sophisticated motion module. This module, trained on numerous video clips, is adept at capturing the essence of motion dynamics, thereby enabling the generation of animated outputs that range from the whimsical charm of anime to the stark realism of photographs.

To get started, we call the AnimateDiff model hosted on Replicate from our Python environment. The process is quite straightforward, as illustrated below:

import replicate

# The Replicate client reads your API token from the REPLICATE_API_TOKEN
# environment variable, so set it in your shell before running this script.

print("Generating video with AnimateDiff")

# Run the hosted AnimateDiff model with a text prompt
animated_output = replicate.run(
    "zsxkib/animate-diff:269a616c8b0c2bbc12fc15fd51bb202b11e94ff0f7786c026aa905305c4ed9fb",
    input={"prompt": "a medium shot of a vibrant coral reef with a variety of marine life"}
)

# The first item of the output is the generated video
video_url = animated_output[0]

print(video_url)

In this snippet, we use the replicate.run function to invoke the AnimateDiff model with a specific prompt. The result is a URL pointing to the generated video, showcasing a medium shot of a vibrant coral reef teeming with marine life.

Enhancing Smoothness with ST-MFNet

After generating our initial video, the next step involves increasing its smoothness by interpolating additional frames. This is where ST-MFNet, a cutting-edge model specializing in frame interpolation, comes into play. By analyzing changes in both space and time, ST-MFNet adeptly generates extra frames, thus enhancing the fluidity of the video.

The integration of ST-MFNet to interpolate our previously generated video is demonstrated in the following code:

print("Interpolating video with ST-MFNet for enhanced smoothness")

interpolated_videos = replicate.run(
    "zsxkib/st-mfnet:2ccdad61a6039a3733d1644d1b71ebf7d03531906007590b8cdd4b051e3fbcd7",
    input={"mp4": video_url, "keep_original_duration": True, "framerate_multiplier": 4},
)

smooth_video_url = list(interpolated_videos)[-1]

print(smooth_video_url)

In this segment, we're raising the framerate of our initial video by a factor of four without altering its original duration, thanks to the framerate_multiplier and keep_original_duration parameters. The end result is a link to the smoothed video, ready to mesmerize viewers with its enhanced fluidity.
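The two steps can also be chained in a single function. The sketch below is one possible arrangement, not the only one: the helper name `text_to_smooth_video` is hypothetical, and the `run` argument is assumed to have the same call shape as `replicate.run`, which makes it possible to try the logic with a stand-in function before spending API credits.

```python
from typing import Any, Callable

# Model identifiers copied from the snippets in this guide.
ANIMATE_DIFF = "zsxkib/animate-diff:269a616c8b0c2bbc12fc15fd51bb202b11e94ff0f7786c026aa905305c4ed9fb"
ST_MFNET = "zsxkib/st-mfnet:2ccdad61a6039a3733d1644d1b71ebf7d03531906007590b8cdd4b051e3fbcd7"

Runner = Callable[..., Any]  # assumed call shape: run(model, input={...})


def text_to_smooth_video(prompt: str, run: Runner, multiplier: int = 4) -> Any:
    """Generate a clip from `prompt`, interpolate it, and return the result."""
    animated = run(ANIMATE_DIFF, input={"prompt": prompt})
    # The first output item is taken as the generated video.
    video_url = list(animated)[0]
    interpolated = run(
        ST_MFNET,
        input={
            "mp4": video_url,
            "keep_original_duration": True,
            "framerate_multiplier": multiplier,
        },
    )
    # As in the snippet above, the last item is taken as the finished video.
    return list(interpolated)[-1]
```

With the real client and `REPLICATE_API_TOKEN` set in the environment, this would be called as `text_to_smooth_video("a vibrant coral reef", replicate.run)`.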

Conclusion

By combining the creative prowess of AnimateDiff with the smoothing capabilities of ST-MFNet, we've demonstrated how to transform a simple text prompt into a visually stunning video. The process, as detailed above, leverages the power of Python and the Replicate API, making it accessible to creators of all skill levels.

Should you embark on this journey of video creation, remember that the key to mastery lies in experimentation. Adjust prompts, experiment with different camera movements, and play around with the interpolation settings. Each tweak opens up new realms of possibility, paving the way for unique and engaging content.

Your feedback and creations are highly valued. Share your experiences and videos with the community to inspire and be inspired. Happy video crafting!

Conclusion

Wrapping Up Your AI Video Creation Journey

Embarking on the journey of creating AI-generated videos can be both exhilarating and challenging. Using tools like AnimateDiff and ST-MFNet, as showcased, opens up a world of possibilities for crafting smooth, high-frame-rate videos directly from text prompts. This process not only enhances the visual experience but also brings your creative visions to life in a way that was previously unimaginable.

Share and Inspire

Upon mastering the art of generating and interpolating videos with these advanced AI models, we strongly encourage you to showcase your work. Sharing your creations can spark inspiration and encourage a community of creators to explore new boundaries of AI-generated content. Whether you prefer the vibrant interactions on Discord or the wide-reaching platforms like Twitter, your videos have the potential to captivate and inspire. Tag us @replicate and let the community marvel at what you've achieved.

Continuous Learning and Exploration

The field of AI video generation is rapidly evolving, with new models and techniques emerging regularly. As you continue your journey, stay curious and open to experimenting with different settings, prompts, and models. The combination of AnimateDiff and ST-MFNet is just the beginning. The future holds endless possibilities for those willing to explore and push the limits of what AI can achieve in video creation.

Engage with the Community

Remember, you're not alone in this endeavor. The community surrounding AI video generation is filled with individuals eager to share their knowledge, exchange tips, and offer support. Engaging with this community can provide valuable insights, help you overcome challenges, and keep you informed about the latest advancements in the field. Whether it's through forums, social media, or dedicated platforms like Replicate's Discord channel, connecting with fellow creators can enrich your journey.

Final Thoughts

As we conclude this guide, we hope it has equipped you with the knowledge and inspiration needed to dive into the world of AI-generated videos. The combination of AnimateDiff, ST-MFNet, and your creativity is a powerful force capable of producing truly stunning visuals. Remember, this is just the beginning of your adventure. Keep exploring, keep creating, and most importantly, keep sharing your creations with the world. The impact of your work extends beyond the videos you create—it inspires a wave of innovation and creativity across the community.

We can't wait to see the incredible videos you'll bring to life. Happy creating!