Neural Text-to-Speech Synthesis: A Technical Dive into Future Speech Tech

Unveiling Neuroprosthetics in Speech Synthesis: The Next Frontier

The emergence of neuroprosthetics in speech synthesis points toward a future in which technology can restore a voice to people who have lost theirs. Research published in 2023 combines neural engineering with text-to-speech (TTS) technology and holds the promise of giving a voice to those who have been without one. At its core are neural network models trained to interpret the brain activity that accompanies the intent to communicate and to render it as audible speech, without requiring the user to move.

For American university research scientists and software engineers who work with TTS APIs and neural TTS development, these advances are more than an incremental step. Applications of neural TTS now range from aiding individuals with severe speech impairments to improving virtual interactions on educational, medical, and entertainment platforms. The field invites solutions that are both scientifically significant and deeply human.

| Topics | Discussions |
|---|---|
| Introduction to Neuroprosthetic Speech Systems | An exploration into the cutting-edge domain of neuroprosthetics, which is revolutionizing speech synthesis through brain-computer interfaces. |
| "A High-Performance Neuroprosthesis for Speech Decoding and Avatar Control" | Detailed insight into the neuroprosthetic development aimed at decoding speech and controlling avatars, paving new ways for assistive communication. |
| Implications for Assistive Communication Technologies | Discussion on how recent neuroprosthetic innovations may influence future assistive technologies and enhance communication for individuals with speech impairments. |
| The Future of Neural Speech Synthesis | Forecasting the advancement of speech synthesis with neural networks and its potential applications and impact on numerous sectors. |
| Common Questions Re: Neural TTS | A curated Q&A addressing the most common curiosities about neural speech synthesis and AI's expanding role in developing TTS technology. |

Introduction to Neuroprosthetic Speech Systems

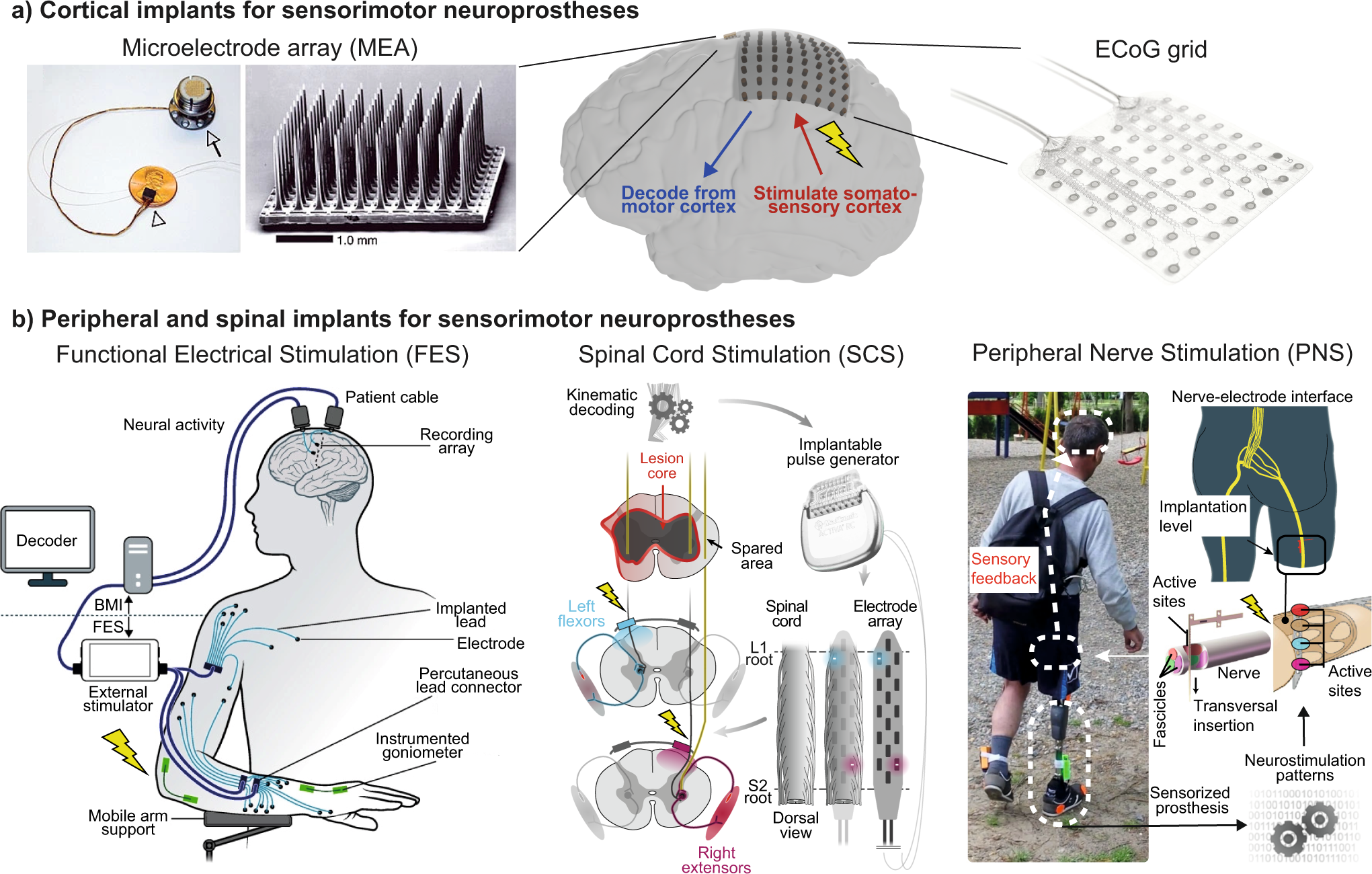

The field of neuroprosthetics has opened a new chapter in speech synthesis, connecting neural research with practical communication solutions. The key terms below describe what these systems are and what they can do. Understanding them is essential for professionals who design and implement these technologies, because they give context to both the mechanics and the outcomes of neuroprosthetic speech systems.

| Term | Definition |
|---|---|
| Neuroprosthetics | Assistive devices that connect to the nervous system to replace or augment functions, such as speech, that have been lost to injury or disease. |
| Speech Synthesis | The artificial production of human speech, most commonly by converting written text into audio that replicates natural speech patterns. |
| Neural Networks | Computational models, inspired by the human brain, designed to recognize patterns and interpret data through a structure of interconnected nodes. |
| Artificial Intelligence (AI) | Field of computer science dedicated to creating machines capable of performing tasks that would typically require human intelligence. |
| Deep Learning | A subset of machine learning that uses neural networks with many layers to learn from data, applied to tasks such as detecting objects, recognizing speech, translating languages, and supporting decisions. |
| Text-to-Speech (TTS) | A technology that reads digital text aloud, often used as assistive technology to support individuals with visual impairments or reading disabilities. |
| Communication Disorders | Conditions that impede normal speech, language, and communication, ranging from hearing impairment to complex cognitive issues. |
| Avatar Control | The use of computer-generated characters or ‘avatars’ that can be controlled by neuroprosthetic interfaces, often used in virtual environments or rehabilitation scenarios. |

"A High-Performance Neuroprosthesis for Speech Decoding and Avatar Control"

In the study "A high-performance neuroprosthesis for speech decoding and avatar control", published on August 23, 2023, researchers presented a neuroprosthetic system that marks a significant advance in assistive speech technology. The research, by a team that includes Sean L. Metzger and Edward F. Chang, examines how decoding activity from the speech cortex can help people with severe motor impairments communicate. The system interprets neural signals associated with the intention to speak and translates them into intelligible synthesized speech using decoding algorithms.
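The idea of turning a stream of neural features into speech units can be illustrated with a deliberately simplified sketch. It is not the method used in the study: the two-dimensional features, the `CENTROIDS` table, and the nearest-centroid rule are all hypothetical stand-ins chosen so the example runs on its own. Each frame is labeled with the closest unit, consecutive repeats are merged, and silence is dropped.

```python
from typing import Dict, List, Optional, Sequence

# Hypothetical centroids: one mean feature vector per speech unit. In a real
# system these would be learned from recorded neural activity.
CENTROIDS: Dict[str, Sequence[float]] = {
    "_": (0.0, 0.0),  # silence / no speech intent
    "h": (1.0, 0.0),
    "i": (0.0, 1.0),
}


def nearest_unit(frame: Sequence[float]) -> str:
    """Return the unit whose centroid is closest to this feature frame."""
    def sq_dist(name: str) -> float:
        return sum((a - b) ** 2 for a, b in zip(frame, CENTROIDS[name]))
    return min(CENTROIDS, key=sq_dist)


def decode(frames: List[Sequence[float]]) -> str:
    """Label every frame, merge consecutive repeats, and drop silence."""
    units: List[str] = []
    previous: Optional[str] = None
    for frame in frames:
        unit = nearest_unit(frame)
        if unit != previous and unit != "_":
            units.append(unit)
        previous = unit
    return "".join(units)


# Toy feature frames (assumed values, not real recordings).
frames = [(0.1, 0.0), (0.9, 0.1), (1.1, -0.1), (0.2, 0.8), (0.0, 1.2), (0.1, 0.1)]
print(decode(frames))
```

In a pipeline of this shape, the decoded unit sequence would then be handed to a speech synthesizer. A production decoder would replace the nearest-centroid rule with a trained neural network.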

Metzger, Littlejohn, Silva, Moses, and colleagues overcame earlier limitations, combining decoded speech with avatar-based facial and gestural expression in a way that represents a meaningful advance in neuroengineering. They have created a platform through which people with paralysis can express themselves, a tool that fosters autonomy and human connection. The technical detail and results of their work have implications across disciplines and show what is possible when neural science and speech synthesis are combined.

The publication of this research underscores a collective drive toward technology that improves lives. The attention it has received from the scholarly community (28k accesses, 12 citations, and an Altmetric score of 3377 at the time of writing) highlights the relevance of the project to neural prosthetics and beyond.

Implications for Assistive Communication Technologies

The findings from research on neuroprosthetic systems for speech decoding have significant implications for assistive communication technologies. These systems show how bioengineering and software can combine to change how individuals with paralysis communicate. Built on carefully trained neural networks and decoding algorithms, the devices let users not only generate speech but also control digital avatars. This dual capability points to a future in which individuals can be virtually present and communicative in ways that were previously unattainable.

Beyond the technical achievement, these developments raise important questions about accessibility and quality of life for people with speech-impairing conditions. Wide-scale deployment of such neuroprosthetics could lead to more inclusive environments, both online and in person. Real-world applications range from assisting speech-impaired individuals in everyday communication to offering new avenues for creative expression through avatar-mediated interaction.

As the research is translated into real-world applications, the social implications, ethics, and policy surrounding these assistive technologies will warrant thoughtful discussion and action. Equipping individuals with the means to communicate when they otherwise could not shows respect for human dignity and autonomy, and it underscores technology's role not just as a tool but as a bridge to fuller participation in society.

The Future of Neural Speech Synthesis

Unreal Speech aims to make text-to-speech (TTS) more affordable with an API designed to reduce costs without compromising natural sound quality. Academic researchers, who often work within fixed budgets, can use it to run more experiments and broaden their studies. Software engineers who add TTS functionality to applications benefit from the API's lower cost, which frees resources for other areas of development.

Game developers can use Unreal Speech to integrate TTS without the historically high cost of realistic voice generation, improving gameplay and narrative immersion. Educators gain a way to support students with disabilities by delivering a variety of spoken content, helped by the large character allowances included in Unreal Speech's economical plans.

While the service currently emphasizes English voices, its development roadmap includes multilingual support, which matters for global applications. The API also provides per-word timestamps, which allow speech to be synchronized precisely with text or visuals, a requirement in use cases ranging from virtual assistants to multimedia production.
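One way per-word timestamps support synchronization is caption generation. The sketch below groups timestamped words into SRT-style caption cues. The record layout is an assumption made for illustration: the field names `word`, `start`, and `end` (in seconds) are hypothetical and are not taken from Unreal Speech's documented response format, so adapt them to whatever the API actually returns.

```python
from typing import Dict, List

# Hypothetical timestamp records: the field names "word", "start", and "end"
# (seconds) are assumptions for illustration, not a documented API schema.
Word = Dict[str, object]


def format_srt_time(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def words_to_srt(words: List[Word], max_words: int = 4) -> str:
    """Group consecutive words into caption cues of at most max_words words."""
    cues: List[str] = []
    for i in range(0, len(words), max_words):
        chunk = words[i:i + max_words]
        start = format_srt_time(float(chunk[0]["start"]))
        end = format_srt_time(float(chunk[-1]["end"]))
        text = " ".join(str(w["word"]) for w in chunk)
        cues.append(f"{len(cues) + 1}\n{start} --> {end}\n{text}\n")
    return "\n".join(cues)


# Made-up sample data standing in for an API response.
sample: List[Word] = [
    {"word": "Neural", "start": 0.00, "end": 0.42},
    {"word": "speech", "start": 0.42, "end": 0.85},
    {"word": "sounds", "start": 0.85, "end": 1.30},
    {"word": "more", "start": 1.30, "end": 1.55},
    {"word": "natural", "start": 1.55, "end": 2.10},
]
print(words_to_srt(sample, max_words=3))
```

Each cue starts at the first word's start time and ends at the last word's end time, so captions stay aligned with the audio regardless of speaking rate.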

Common Questions Re: Neural TTS

Explaining the Essence of Neural Speech Synthesis

Neural speech synthesis is the branch of TTS that aims to reproduce the intricacies of human communication. It uses neural networks to generate speech that captures nuances characteristic of human speakers, such as emotional inflection and context-specific intonation.

Comparing Neural TTS to Traditional TTS Technologies

Neural TTS is a marked departure from traditional TTS methods. Earlier approaches often pieced together pre-recorded speech segments, which resulted in more mechanical-sounding output. Neural TTS instead generates speech from a learned model, often in real time, producing fluid and lifelike audio that is closer to genuine human speech.
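The traditional approach of piecing together stored segments can be sketched in a few lines. This is a toy illustration under stated assumptions: the `UNITS` inventory holds sine tones instead of recorded speech, and the unit names, sample rate, and crossfade length are all hypothetical. It shows why joins matter: every seam between units has to be smoothed, and imperfect seams are one source of the mechanical sound.

```python
import math
from typing import Dict, List

SAMPLE_RATE = 16_000  # samples per second (assumed for this toy example)


def tone(freq_hz: float, duration_s: float) -> List[float]:
    """Stand-in for a recorded speech unit: a plain sine tone."""
    n = int(SAMPLE_RATE * duration_s)
    return [math.sin(2 * math.pi * freq_hz * t / SAMPLE_RATE) for t in range(n)]


# Hypothetical unit inventory. A real system stores recorded speech units
# (for example diphones), not tones.
UNITS: Dict[str, List[float]] = {
    "HH": tone(220.0, 0.05),
    "AH": tone(330.0, 0.05),
    "L": tone(440.0, 0.05),
    "OW": tone(550.0, 0.05),
}


def concatenate(unit_names: List[str], fade: int = 80) -> List[float]:
    """Join stored units, blending `fade` samples at each seam."""
    out: List[float] = []
    for name in unit_names:
        unit = UNITS[name]
        if not out:
            out.extend(unit)
            continue
        k = min(fade, len(out), len(unit))
        for i in range(k):
            w = (i + 1) / (k + 1)  # weight ramps toward the incoming unit
            out[-k + i] = (1 - w) * out[-k + i] + w * unit[i]
        out.extend(unit[k:])
    return out


audio = concatenate(["HH", "AH", "L", "OW"])
print(len(audio), "samples,", round(len(audio) / SAMPLE_RATE, 3), "seconds")
```

A neural system has no such inventory to stitch together. It predicts the audio, or an intermediate acoustic representation, directly from the text, which is why its output has no seams.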

Diving Into AI's Role in Transforming Text Into Speech

AI changes TTS by using models that learn from data how context shapes pronunciation, tone, and inflection. Because these models account for such subtleties, the speech they generate can convey the meaning and emotion intended by the text, giving digital speech a depth that is closer to authentic human conversation.