OpenAI Function Calling

Introduction

The OpenAI API makes it simple to build language-based applications. It's almost like having a digital assistant at your fingertips that can handle complex prompts and produce useful output with minimal coding effort from you.

That being said, even seasoned developers and engineers, who usually work with structured data, can struggle with the OpenAI API. The crux of the issue is that the API consumes and generates unstructured data: free-form text strings, not the neat rows and columns they're used to.

To pull meaningful information out of these text strings and keep the results consistent and reliable, they often turn to regular expressions (RegEx) or prompt engineering. It's a bit like trying to find patterns in a starry sky: possible, but it requires some skill and a lot of patience.

But here's the good news: OpenAI has added a function calling feature to its newer models, such as GPT-3.5 and GPT-4. You describe your own functions in the request, and the model can answer with the name of a function and a set of JSON arguments for it instead of free-form text. The API never runs the function itself; your code does. This turns unruly text strings into neatly organized data, without wrestling with RegEx or spending hours crafting the perfect prompt.

In this tutorial, we will learn how OpenAI function calling can help resolve common problems caused by irregular model outputs.

Using OpenAI with function calling

We will first generate a response with OpenAI's gpt-3.5-turbo without function calling, to test for consistency.

The code for this tutorial is available in our GitHub snippets repository for reference.

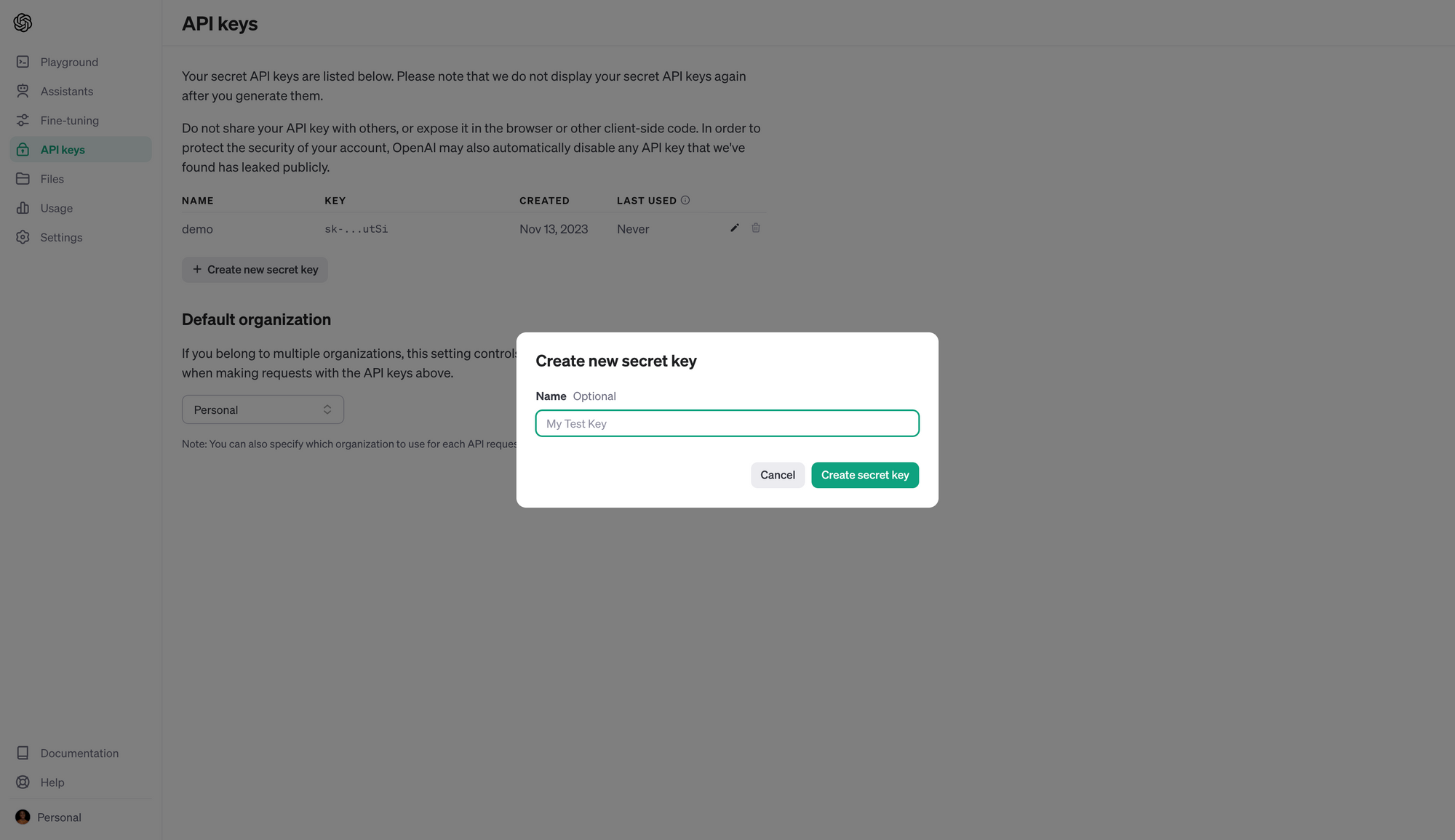

The first thing we need to do is head over to OpenAI and get an API key. Once we have the key, the next step is to set up our dev environment.

We are going to use Python and the OpenAI SDK, so let's set up a virtual environment and then install our dependencies.

Open your favorite code editor or IDE and create a new project.

Use these commands to set up your virtual environment. Note that I am using a Linux terminal; the commands may differ on Windows.

    pip install virtualenv

    # Create an environment
    virtualenv venv

    # Activate your virtualenv environment
    source venv/bin/activate

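Now that you have your API key and your virtual environment, let's install the dependencies. The examples in this tutorial call openai.ChatCompletion, which belongs to the SDK releases before 1.0, so the version is pinned accordingly.

    pip install "openai<1" python-dotenv

python-dotenv is used to load our API key from a .env file.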
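As a rough sketch of that last step, the key can be read from the environment once the .env file has been loaded. The variable name OPENAI_API_KEY and the load_api_key helper are assumptions made for illustration; the tutorial's repository may do this differently.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in the environment (hypothetical helper)."""
    try:
        # python-dotenv copies KEY=value pairs from a local .env file into
        # os.environ; by default it leaves already-set variables untouched.
        from dotenv import load_dotenv

        load_dotenv()
    except ImportError:
        pass  # python-dotenv is not installed; rely on the shell environment

    key = os.getenv(env_var)
    if not key:
        raise RuntimeError(f"{env_var} is not set; add it to your .env file")
    return key
```

With the pre-1.0 SDK, the result would then be assigned with openai.api_key = load_api_key() before making any request.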

Problem Overview

OpenAI has released a new capability for their models through the API, called "Function Calling." The intent is to make it far easier to extract structured, deterministic information from an otherwise unstructured and non-deterministic language model like GPT-4.

Structuring and getting deterministic outputs from a language model has so far been a very difficult task, and has been the subject of much research. The usual approach was to keep trying various pre-prompts and few-shot examples until you found one that at least worked. While doable, the end result was clunky and not very reliable. Now, though, you can use the function calling capability to build quite powerful programs, essentially adding intelligence to them.

Imagine you want to intelligently handle many different types of input, including something like: "What's the weather like in Boston?"

The task now, given this natural language input to GPT-4, would be:

- To identify if the user is seeking weather information

- If they are, extract the location from their input

So if the user said "Hello, how are you today?", we wouldn't need to run the function or try to extract a location.

But, if the user said something like: "What's the weather like in Boston?" then we want to identify the desire to get the weather and extract the location "Boston" from the input.
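For contrast, here is roughly what those two steps look like when hand-rolled with a regular expression and no language model. The pattern and the detect_weather_request name are illustrative assumptions; the point is how quickly a fixed pattern stops matching once the phrasing changes.

```python
import re
from typing import Optional

# Matches phrasings such as "weather like in Boston" or "weather in Paris".
WEATHER_PATTERN = re.compile(r"weather(?: like)? in ([A-Z][\w .-]*?)\s*\??$")


def detect_weather_request(user_input: str) -> Optional[str]:
    """Return the location if the input looks like a weather question."""
    match = WEATHER_PATTERN.search(user_input)
    return match.group(1) if match else None


print(detect_weather_request("What's the weather like in Boston?"))
print(detect_weather_request("Hello, how are you today?"))
print(detect_weather_request("Do I need an umbrella in Boston tomorrow?"))
```

The third call returns None even though it is clearly a weather question, which is the gap function calling is meant to close.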

Previously, you might pass this input to the OpenAI API like:

    import openai  # pre-1.0 SDK; set openai.api_key before making requests

    completion = openai.ChatCompletion.create(
        model="gpt-3.5-turbo-0613",
        messages=[{"role": "user", "content": "What's the weather like in Boston?"}],
    )

And then you'd access the response via:

    reply_content = completion.choices[0].message.content
    print(reply_content)

Output:

I am an AI language model and I cannot provide real-time information. To check the current weather in Boston, please refer to a weather website or app, such as weather.com, AccuWeather, or use a voice assistant like Google Assistant or Siri.

As you can see, this isn't quite what we want in this scenario! While the model can't access the internet for us, we could conceivably fetch the weather ourselves, but we would still need to identify the intent, as well as the desired location. Imagine we have a function like:

    import json

    def get_current_weather(location, unit="fahrenheit"):
        """Get the current weather in a given location"""
        # Placeholder data; a real implementation would query a weather service
        weather_info = {
            "location": location,
            "temperature": "72",
            "unit": unit,
            "forecast": ["sunny", "windy"],
        }
        return json.dumps(weather_info)

This is just a placeholder function to show how this all ties together; it could be anything. Extracting the intent and location could be done with a pre-prompt, and that is roughly what OpenAI does behind the API, but the model has been trained on a particular structure for us, which saves a lot of R&D time hunting for the "best prompt".

To use function calling, we need model version gpt-3.5-turbo-0613 or later. Then, we can pass a new functions parameter to the ChatCompletion call like:

    completion = openai.ChatCompletion.create(
        model="gpt-3.5-turbo-0613",
        messages=[{"role": "user", "content": "What's the weather like in Boston?"}],
        functions=[
            {
                "name": "get_current_weather",
                "description": "Get the current weather in a given location",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "location": {
                            "type": "string",
                            "description": "The city and state, e.g. San Francisco, CA",
                        },
                        "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                    },
                    "required": ["location"],
                },
            }
        ],
        function_call="auto",
    )

The first thing to note is the function_call="auto" part. This lets the model decide whether it should call a function and fill in its parameters. You can also set it to "none" to prevent any function call, and finally you can make it fill in the parameters for a specific function with something like function_call={"name": "get_current_weather"}. There are many instances where it is hard for the model to determine whether a function should be run, so being able to force the call when you know it should happen is actually very powerful, which I'll show soon.
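The three modes can be gathered into one small helper that builds the keyword arguments for the request. This is only a sketch: build_request_kwargs is a hypothetical name, and it only assembles a dictionary, leaving the actual openai.ChatCompletion.create call to you.

```python
from typing import Any, Dict, List, Optional


def build_request_kwargs(
    messages: List[Dict[str, str]],
    functions: List[Dict[str, Any]],
    mode: str = "auto",
    force: Optional[str] = None,
    model: str = "gpt-3.5-turbo-0613",
) -> Dict[str, Any]:
    """Assemble keyword arguments for a chat completion with functions."""
    if force is not None:
        # Name one function to make the model return arguments for it.
        if force not in {f["name"] for f in functions}:
            raise ValueError(f"unknown function: {force}")
        function_call: Any = {"name": force}
    elif mode in ("auto", "none"):
        # "auto": the model decides; "none": the model must reply with text.
        function_call = mode
    else:
        raise ValueError('mode must be "auto" or "none"')
    return {
        "model": model,
        "messages": messages,
        "functions": functions,
        "function_call": function_call,
    }


weather_fn = {
    "name": "get_current_weather",
    "parameters": {"type": "object", "properties": {}},
}
question = [{"role": "user", "content": "What's the weather like in Boston?"}]

print(build_request_kwargs(question, [weather_fn])["function_call"])
print(build_request_kwargs(question, [weather_fn], force="get_current_weather")["function_call"])
```

The returned dictionary would be unpacked into the request, as in openai.ChatCompletion.create(**kwargs).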

Beyond this, we name and describe the function, then describe the parameters that we'd hope to pass to it. The model relies on these descriptions to identify what you want, so try to be as clear as possible here. The parameters are described as a JSON Schema object, which affords you quite a bit of flexibility in how you structure this functionality, and the API returns the extracted arguments as a JSON string that is meant to follow that schema.
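Since the description is a plain dictionary, it can be assembled by a small helper that also catches a common slip: listing a required parameter that was never declared. A sketch, with make_function_schema as a hypothetical name:

```python
from typing import Any, Dict, List


def make_function_schema(
    name: str,
    description: str,
    properties: Dict[str, Dict[str, Any]],
    required: List[str],
) -> Dict[str, Any]:
    """Build one entry for the `functions` list of a chat completion request."""
    missing = [key for key in required if key not in properties]
    if missing:
        raise ValueError(f"required parameters not declared: {missing}")
    return {
        "name": name,
        "description": description,
        "parameters": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


weather_schema = make_function_schema(
    name="get_current_weather",
    description="Get the current weather in a given location",
    properties={
        "location": {
            "type": "string",
            "description": "The city and state, e.g. San Francisco, CA",
        },
        "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
    },
    required=["location"],
)
print(weather_schema["parameters"]["required"])
```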

Okay, let's see how the model responds to our new prompt:

    reply_content = completion.choices[0]
    reply_content

Output:

    <OpenAIObject at 0x7f7d42ef3e50> JSON: {
      "finish_reason": "function_call",
      "index": 0,
      "message": {
        "content": null,
        "function_call": {
          "arguments": "{\n \"location\": \"Boston\"\n}",
          "name": "get_current_weather"
        },
        "role": "assistant"
      }
    }

This time, we don't actually have any message content. We instead have an identified function_call for the function named get_current_weather, along with the parameters that were extracted from the input, in this case the location, which the model accurately detected as Boston.

We can convert this OpenAI object to a more familiar Python dict by doing:

    reply_content = completion.choices[0].message
    funcs = reply_content.to_dict()['function_call']['arguments']
    funcs = json.loads(funcs)
    print(funcs)
    print(funcs['location'])

Output:

    {'location': 'Boston'}
    Boston
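With the arguments decoded, the remaining step is to call the real Python function and, if a natural-language answer is wanted, hand its result back to the model as a message with the "function" role. The sketch below covers that step on plain dicts shaped like the JSON above; run_function_call and the AVAILABLE_FUNCTIONS registry are hypothetical names, and the final request to the API is left out.

```python
import json
from typing import Any, Callable, Dict, List, Optional


def get_current_weather(location: str, unit: str = "fahrenheit") -> str:
    """Placeholder from earlier in the tutorial; returns canned data."""
    weather_info = {
        "location": location,
        "temperature": "72",
        "unit": unit,
        "forecast": ["sunny", "windy"],
    }
    return json.dumps(weather_info)


# Only functions listed here can be triggered by the model.
AVAILABLE_FUNCTIONS: Dict[str, Callable[..., str]] = {
    "get_current_weather": get_current_weather,
}


def run_function_call(message: Dict[str, Any]) -> Optional[Dict[str, str]]:
    """Run the function requested in `message` and wrap its result as a
    "function" role message; return None when the reply is plain text."""
    call = message.get("function_call")
    if not call:
        return None
    function = AVAILABLE_FUNCTIONS.get(call["name"])
    if function is None:
        raise ValueError(f"model asked for unknown function {call['name']!r}")
    arguments = json.loads(call["arguments"])  # arguments arrive as a JSON string
    return {"role": "function", "name": call["name"], "content": function(**arguments)}


assistant_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_current_weather",
        "arguments": "{\n \"location\": \"Boston\"\n}",
    },
}
messages: List[Dict[str, Any]] = [
    {"role": "user", "content": "What's the weather like in Boston?"},
    assistant_message,
]
result = run_function_call(assistant_message)
if result is not None:
    messages.append(result)
print(messages[-1])
```

Sending messages through another ChatCompletion request would then let the model phrase the weather data as a normal reply.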

Not only can we extract information or intent from a user's input, we can also extract structured data from GPT-4 in a response.

For example, I've been working on a project called TermGPT, which creates terminal commands to satisfy a user's engineering or programming query.

Imagine in this scenario, you have user input like: "How do I install Tensorflow for my GPU?"

In this case, we'd get a useful natural language response from GPT-4, but it wouldn't be structured as JUST terminal commands that could be run. We have an intent that could be extracted from here, but the commands themselves need to be determined by GPT-4.

With the function calling capability, we can do this by passing a function description like:

    functions=[
        {
            "name": "get_commands",
            "description": "Get a list of bash commands on an Ubuntu machine to run",
            "parameters": {
                "type": "object",
                "properties": {
                    "commands": {
                        "type": "array",
                        "items": {
                            "type": "string",
                            "description": "A terminal command string"
                        },
                        "description": "List of terminal command strings to be executed"
                    }
                },
                "required": ["commands"]
            }
        }
    ]

This is my first attempt at a description and structure, and there are likely better ones, for reasons I'll explain shortly. Here, the function is named "get_commands" and described as "Get a list of bash commands on an Ubuntu machine to run". We then specify the commands parameter as an "array" (a list in Python) whose items are each a terminal command string, and we describe the list as "List of terminal command strings to be executed".
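To make the schema concrete, the check below spells out what a conforming arguments object looks like: a JSON object with a commands key holding a list of strings. It is a hand-written sketch for this one schema (is_valid_commands_payload is a hypothetical name), not a general JSON Schema validator.

```python
import json
from typing import Any


def is_valid_commands_payload(arguments_json: str) -> bool:
    """Check a function_call arguments string against the get_commands schema."""
    try:
        payload: Any = json.loads(arguments_json)
    except json.JSONDecodeError:
        return False  # not JSON at all
    if not isinstance(payload, dict) or "commands" not in payload:
        return False  # "commands" is listed under "required"
    commands = payload["commands"]
    # "type": "array" with "items": {"type": "string"}
    return isinstance(commands, list) and all(isinstance(c, str) for c in commands)


print(is_valid_commands_payload('{"commands": ["sudo apt update", "pip install --upgrade pip"]}'))
print(is_valid_commands_payload('{"commands": "sudo apt update"}'))
print(is_valid_commands_payload('{"command": []}'))
```

Only the first payload passes; the second has a string where the array should be, and the third misspells the required key.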

Now, let's see how GPT-4 responds to this prompt:

    example_user_input = "How do I install Tensorflow for my GPU?"

    completion = openai.ChatCompletion.create(
        model="gpt-4-0613",
        messages=[{"role": "user", "content": example_user_input}],
        functions=[
            {
                "name": "get_commands",
                "description": "Get a list of bash commands on an Ubuntu machine",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "commands": {
                            "type": "array",
                            "items": {
                                "type": "string",
                                "description": "A terminal command string"
                            },
                            "description": "List of terminal command strings to be executed"
                        }
                    },
                    "required": ["commands"]
                }
            }
        ],
        function_call="auto",
    )

    reply_content = completion.choices[0].message
    reply_content

Output:

    <OpenAIObject at 0x7f7d42f02450> JSON: {
      "content": "To install Tensorflow for your GPU, you would normally follow these steps:\n\n1. First, Install the Python software on your machine. You can download it from the official Python website. Tensorflow supports Python versions 3.5 to 3.8.\n\n2. Make sure pip, Python\u2019s package manager, is upgraded to the latest version:\n\n```\npip install --upgrade pip\n```\n\n3. Install the Tensorflow GPU package using pip. Tensorflow also offers a CPU-only package for users who do not have a compatible GPU:\n\n```\npip install tensorflow-gpu\n```\n\n4. Before using the GPU version of Tensorflow, you need to install GPU drivers. You can download them from the NVIDIA website.\n\n5. Finally, install the CUDA Toolkit and the cuDNN SDK. These are software platforms from NVIDIA needed for GPU-accelerated applications. You can also download these from the NVIDIA website.\n\nNote, these instructions are general and the exact approach may vary depending on your setup and environment. You should also ensure your machine meets the specific system requirements of Tensorflow.\n\nDisclaimer: \n\nPlease note that GPU support for Tensorflow requires NVIDIA\u00ae GPU card with CUDA\u00ae Compute Capability 3.5 or higher. The Tesla Architecture is no longer supported. MAC OS is not supported for GPU usage. GPUs using the Ampere Architecture (Compute Capability 8.6) can use CUDA 11.0 after a compatible up-to-date driver is installed.\n\nFor complete instruction, please visit the official TensorFlow website [here](https://www.tensorflow.org/install/gpu).",
      "role": "assistant"
    }

In this case, I set function_call to "auto", and it turns out to be fairly hard for GPT-4 to determine that the intent was to call this function: it answered in prose. I suspect this is caused by the difference between extracting info from a user's input and structuring a response. That said, I am quite sure that we could adjust the names and descriptions in our function definition to be far more successful here.

But even when the auto version fails, we can "nudge" GPT-4 to do it anyway by setting function_call to {"name": "your_function"}. This forces GPT-4 to return a call to that function, even if it doesn't think it should or doesn't realize it.
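One way to combine the two modes is to try "auto" first and only force the call when the reply comes back as plain text. The sketch below assumes the pre-1.0 SDK and takes the request function as a parameter (in real use that would be openai.ChatCompletion.create), so the fallback logic can be exercised with a stand-in. get_commands_with_fallback and fake_create are hypothetical names, and the schema is the one used in the request above.

```python
import json
from typing import Any, Callable, Dict, List

GET_COMMANDS = {
    "name": "get_commands",
    "description": "Get a list of bash commands on an Ubuntu machine",
    "parameters": {
        "type": "object",
        "properties": {
            "commands": {
                "type": "array",
                "items": {"type": "string", "description": "A terminal command string"},
                "description": "List of terminal command strings to be executed",
            }
        },
        "required": ["commands"],
    },
}


def get_commands_with_fallback(user_input: str, create: Callable[..., Any]) -> List[str]:
    """Ask for commands with "auto", then force the call if none came back."""
    for function_call in ("auto", {"name": "get_commands"}):
        completion = create(
            model="gpt-4-0613",
            messages=[{"role": "user", "content": user_input}],
            functions=[GET_COMMANDS],
            function_call=function_call,
        )
        message = completion["choices"][0]["message"]
        if message.get("function_call"):
            return json.loads(message["function_call"]["arguments"])["commands"]
    raise RuntimeError("no function call returned, even when forced")


def fake_create(**kwargs: Any) -> Dict[str, Any]:
    """Stand-in for the API: answers in prose unless the call is forced."""
    if kwargs["function_call"] == "auto":
        message = {"role": "assistant", "content": "To install Tensorflow..."}
    else:
        arguments = json.dumps({"commands": ["pip install --upgrade pip"]})
        message = {
            "role": "assistant",
            "content": None,
            "function_call": {"name": "get_commands", "arguments": arguments},
        }
    return {"choices": [{"message": message}]}


print(get_commands_with_fallback("How do I install Tensorflow for my GPU?", fake_create))
```

The stand-in mirrors what happened in this tutorial: prose under "auto", a function call once the function is named explicitly.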

Output with function_call={"name": "get_commands"}:

    <OpenAIObject at 0x7f7d42ebd720> JSON: {
      "content": null,
      "function_call": {
        "arguments": "{\n \"commands\": [\n \"sudo apt update\",\n \"sudo apt install python3-dev python3-venv\",\n \"python3 -m venv tensorflow-gpu\",\n \"source tensorflow-gpu/bin/activate\",\n \"pip install --upgrade pip\",\n \"pip install tensorflow-gpu\"\n ]\n}",
        "name": "get_commands"
      },
      "role": "assistant"
    }

Now, we do get the function call, and we can grab those commands with:

    funcs = reply_content.to_dict()['function_call']['arguments']
    funcs = json.loads(funcs)
    funcs['commands']

Output:

    [
        'sudo apt update',
        'sudo apt install python3-dev python3-venv',
        'python3 -m venv tensorflow-gpu',
        'source tensorflow-gpu/bin/activate',
        'pip install --upgrade pip',
        'pip install tensorflow-gpu'
    ]
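Because these strings come from a model, it is worth looking at them before anything is executed. A minimal sketch, assuming a dry-run default: run_commands is a hypothetical helper that only prints the plan unless dry_run=False is passed, in which case it runs each command through the shell and stops at the first failure.

```python
import subprocess
from typing import List


def run_commands(commands: List[str], dry_run: bool = True) -> List[str]:
    """Print each command and, unless dry_run is set, execute it in order.

    Returns the commands that were printed (dry run) or that succeeded.
    """
    handled = []
    for command in commands:
        print(f"$ {command}")
        if not dry_run:
            # shell=True so the string is interpreted by the shell as typed;
            # check=True raises CalledProcessError on a non-zero exit code.
            subprocess.run(command, shell=True, check=True)
        handled.append(command)
    return handled


planned = run_commands(["sudo apt update", "pip install --upgrade pip"])
```

Note that each command runs in its own shell process here, so a step such as source tensorflow-gpu/bin/activate would not carry over to the commands after it.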

I just can't express how powerful this is. We can now extract structured data from GPT-4, and we can also pass structured data to GPT-4 to have it generate a response. Being able to do this reliably is basically never before seen, and this will make this sort of interaction between deterministic programming logic and non-deterministic language models far more common and just plain possible. The ability to do this is going to be a game changer for the field of AI and programming, and I'm very excited to see what people do with this capability.

Here's another example of how powerful this could be. We could generate responses for a given query in a variety of "personalities" so to speak:

    example_user_input = "Is it safe to drink water from a dehumidifer?"

    completion = openai.ChatCompletion.create(
        model="gpt-4-0613",
        messages=[{"role": "user", "content": example_user_input}],
        functions=[
            {
                "name": "get_varied_personality_responses",
                "description": "ingest the various personality responses",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "sassy_and_sarcastic": {
                            "type": "string",
                            "description": "A sassy and sarcastic version of the response to a user's query",
                        },
                        "happy_and_helpful": {
                            "type": "string",
                            "description": "A happy and helpful version of the response to a user's query",
                        },
                    },
                    "required": ["sassy_and_sarcastic", "happy_and_helpful"],
                },
            }
        ],
        function_call={"name": "get_varied_personality_responses"},
    )

    reply_content = completion.choices[0].message
    reply_content

Output:

    <OpenAIObject at 0x7f7d42ec8720> JSON: {
      "content": null,
      "function_call": {
        "arguments": "{\n\"sassy_and_sarcastic\": \"Oh sure, if you fancy a little microbial cocktail, go right ahead. But seriously, don't. Dehumidifiers aren't designed to purify water for drinking. It could be full of bacteria, mold, and other nasties. Drink from a tap, not your dehumidifier. It is not safe.\",\n\"happy_and_helpful\": \"I'm sorry, but it's not recommended to drink water from a dehumidifier. While it's extracting water from air, dehumidifiers don't filter or clean the water. This means it can contain various bacteria or even chemicals. To keep safe, it's better to only drink water that's meant for consumption and has been treated properly!\"}",
        "name": "get_varied_personality_responses"
      },
      "role": "assistant"
    }

    response_options = reply_content.to_dict()['function_call']['arguments']
    options = json.loads(response_options)
    options["sassy_and_sarcastic"]

Output:

Oh sure, if you fancy a little microbial cocktail, go right ahead. But seriously, don't. Dehumidifiers aren't designed to purify water for drinking. It could be full of bacteria, mold, and other nasties. Drink from a tap, not your dehumidifier. It is not safe.

    options["happy_and_helpful"]

Output:

I'm sorry, but it's not recommended to drink water from a dehumidifier. While it's extracting water from air, dehumidifiers don't filter or clean the water. This means it can contain various bacteria or even chemicals. To keep safe, it's better to only drink water that's meant for consumption and has been treated properly!
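Since both variants arrive in one JSON object, choosing between them at runtime is a dictionary lookup. A small sketch, where pick_response and its fallback behaviour are assumptions made for illustration, and the reply texts are shortened:

```python
import json


def pick_response(arguments_json: str, style: str, default: str = "happy_and_helpful") -> str:
    """Return the reply written in `style`, falling back to `default`."""
    options = json.loads(arguments_json)
    return options.get(style, options[default])


arguments = json.dumps(
    {
        "sassy_and_sarcastic": "Oh sure, if you fancy a little microbial cocktail...",
        "happy_and_helpful": "I'm sorry, but it's not recommended...",
    }
)
print(pick_response(arguments, "sassy_and_sarcastic"))
print(pick_response(arguments, "grumpy"))  # unknown style, falls back to the default
```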

Hopefully that gives you some ideas about what's possible here, but this is truly 0.000001% of what's actually possible. There are going to be some incredible things built with this capability.

Conclusion

The introduction of function calling by OpenAI marks a significant advancement for developers in the realm of AI application development. This feature enables models such as GPT-3.5 and GPT-4 to produce structured JSON data via bespoke functions, effectively addressing the challenges associated with erratic and unreliable text outputs.

With function calling, developers can tap into external web APIs, conduct custom SQL queries, and construct robust AI applications. This capability allows for the efficient extraction of pertinent information from text, ensuring uniform responses for both API and SQL commands.

Throughout this tutorial, we have explored OpenAI's function calling feature: how it produces consistent outputs, how to define various functions, and some use cases. I hope you enjoyed this tutorial. Stay tuned for more exciting content.