Exploring the 2023 Speech Engine Evolution: Advancements in TTS and AI

Advancing Human-Computer Dialogue: The Progress of Speech Engines in 2023

In 2023, human-computer interaction is being reshaped by rapid progress in speech engine technology. Artificial Intelligence (AI), particularly Deep Learning (DL), has driven this evolution and substantially extended the capabilities of Text-to-Speech (TTS) systems. Today's speech engines go beyond simple voice commands: they offer accurate transcription, translation across languages, and more human-like interactions than before. These advancements are primarily fuelled by DL algorithms that let TTS systems mimic the natural prosody and cadence of human speech, narrowing the gap between synthesized speech and human conversation.

Developers can now use these state-of-the-art TTS systems to build voice-enabled devices that understand and respond in a manner closer to a human counterpart. The implications for industries such as customer service, digital content creation, and international business are broad, because the same systems can be deployed across many languages. The latest breakthroughs in machine translation also point towards a future where language barriers matter less and global collaboration becomes easier. These speech technologies not only redefine virtual assistants but also promise greater accessibility, inclusivity, and effectiveness in how we exchange ideas and information.

| Topics | Discussions |
|---|---|
| Overview of Speech Technology Progress | Examine the remarkable strides and advancements made in speech recognition and processing technologies in recent years. |
| The 2023 State of Speech Engines | Insight into the current state of speech engines, highlighting improvements in accuracy and the resulting enhancement of human-device interactions. |
| The Role of Deep Learning in TTS Enhancement | Delve into the contribution of deep learning algorithms in advancing the realism and naturalness of TTS, making voice interactions more intuitive. |
| Technical Guides for TTS Development | Detailed guides and code samples for developers interested in implementing cutting-edge deep learning techniques in TTS systems. |
| Breakthroughs in Machine Translation | Explore the latest innovations in machine translation technology that enhance communication across different languages and their global impact. |
| Common Questions Re: Speech Technology | Answers to pressing questions about neural TTS work, the driving technology behind TTS, and the advancement of AI in rendering TTS as natural as human speech. |

Overview of Speech Technology Progress

As we navigate the sophisticated realm of speech technology, it's imperative to acquaint ourselves with the foundational terminology that shapes our discussions and comprehension. From the intricate algorithms that analyze spoken language to the engines that transform text into lifelike speech, understanding these key terms will enhance our grasp of the nuances embedded within this transformative field. Let us embark on this linguistic journey equipped with a glossary that unpacks the core components and technologies paving the way for the next frontier in speech recognition and synthesis.

Artificial Intelligence (AI): The simulation of human intelligence in machines that are programmed to think like humans and mimic their actions.

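Deep Learning (DL): A subset of machine learning that uses multi-layer neural networks to learn representations directly from data. Training can be supervised, unsupervised, or self-supervised, and the data can include unstructured inputs such as audio and text.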

Text-to-Speech (TTS): A form of speech synthesis that converts text into spoken voice output, typically using synthetic voice.

Speech Recognition: The ability of machines to recognize and respond to spoken language.

Machine Translation: The use of software to translate text or speech from one language to another.

Speech Engine: Software designed to recognize, interpret, and generate human speech.

Transcription: The process of converting speech into written or printed text.

Naturalness: In the context of TTS, it refers to how closely the synthesized speech resembles natural human speech in terms of prosody and pronunciation.

Prosody: The patterns of stress and intonation in a language, which in speech technology helps determine the natural flow of synthesized speech.
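
To make the prosody entry more concrete: many TTS engines accept Speech Synthesis Markup Language (SSML), a W3C standard whose `prosody` element lets a caller request a speaking rate and pitch. The sketch below only builds the markup string. The helper name `build_ssml` is hypothetical, and whether a given engine honors these attributes is an assumption to check against that engine's documentation.

```python
import xml.etree.ElementTree as ET


def build_ssml(text, rate="medium", pitch="default"):
    """Wrap text in an SSML <prosody> element (hypothetical helper).

    `rate` and `pitch` use SSML values such as "slow", "fast",
    "+2st" (two semitones up), or "default".
    """
    speak = ET.Element("speak")
    prosody = ET.SubElement(speak, "prosody", {"rate": rate, "pitch": pitch})
    # ElementTree escapes characters such as "&" when serializing.
    prosody.text = text
    return ET.tostring(speak, encoding="unicode")


if __name__ == "__main__":
    print(build_ssml("Hello & welcome", rate="slow", pitch="+2st"))
```

Building the markup with an XML library rather than string concatenation keeps special characters in the input text from breaking the document.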

The 2023 State of Speech Engines

"The 2023 State of Speech Engines," a comprehensive overview by Erik J. Martin published on February 8, 2023, surveys the significant milestones achieved in speech recognition technologies. Amey Dharwadker, a machine learning tech lead at Meta, emphasizes the pivotal advancements in automated transcription and translation, reflecting the greater precision and capabilities of contemporary speech engines. Higher accuracy in these areas signals considerable progress and shows research being applied successfully to practical, high-impact tasks in both personal and professional settings.
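
The article does not say how transcription accuracy is measured. One widely used metric is word error rate (WER): the word-level edit distance between a reference transcript and the engine's output, divided by the number of reference words. The following is a minimal sketch of that calculation. The function name and the lowercase, whitespace-based tokenization are simplifying assumptions, not something taken from the article.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # Two substituted words out of four reference words.
    print(word_error_rate("turn on the lights", "turn off the light"))
```

A lower value is better, and the value can exceed 1.0 when the output contains many inserted words.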

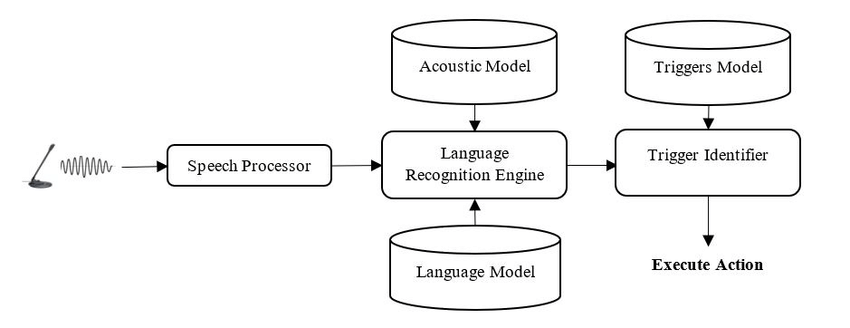

The essence of the recent advancements lies in the utilization of DL algorithms, which have dramatically enhanced the realism and naturalness of TTS. This technological leap has endowed speech engines with the ability to generate speech patterns that closely approximate human voice interactions, allowing for more organic exchanges between individuals and voice-responsive technologies. These developments are not mere incremental upgrades but represent substantial enhancements that have reshaped the utility and user experience of voice-enabled systems across sectors.

Pioneering work in machine translation heralds another area of innovation that profoundly influences multilingual communication and collaboration on a global scale. The ability of speech engines to facilitate fluid multi-language conversations points to significant implications for industries dependent on global interactions, such as customer support and multinational business operations. The collective impact of these technological advancements, occurring cohesively in the speech technology landscape, sets the stage for a future where digital communication transcends previous limitations with a promise of inclusivity and effective worldwide engagement.

The Role of Deep Learning in TTS Enhancement

Deep Learning (DL), a subset of machine learning built on multi-layer neural networks, is pivotal in enhancing how Text-to-Speech (TTS) systems generate human-like speech. DL models are trained on large datasets of recorded human speech and learn to reproduce the intricacies of spoken language, from accents and intonations to emotional inflections. Through this process, TTS technology has been transformed, with synthetic voices becoming more dynamic and expressive, closely mirroring the variations found in day-to-day human speech.

These DL-driven advancements enable TTS engines to offer personalized voice experiences, adapting to user preferences or mimicking specific voices with greater fidelity. The adaptability of DL models means that they can be trained for specialized applications, such as providing assistive communication for individuals with speech impairments or generating voiceovers in multiple languages for global media content.

The success of DL in TTS is not only a triumph of technology but also a gain for accessibility, as the improved naturalness of synthesized speech lowers barriers and makes technology easier for more people to use. As DL algorithms grow more sophisticated each year, the gap between synthetic and natural human speech narrows, pointing towards ever greater realism in computer-generated voice.

Technical Guides for TTS Development

Implementing DL Algorithms in TTS Systems

Implementing Deep Learning (DL) algorithms in Text-to-Speech (TTS) systems involves a multi-step process that includes dataset preparation, model selection, training, and iterative refinement. You typically start by collecting a diverse dataset of recorded speech with matching text that captures the phonetic and prosodic variety of the language. Then, you choose a DL model suitable for audio generation, such as a Recurrent Neural Network (RNN), a Convolutional Neural Network (CNN), or a Transformer-based sequence-to-sequence model. Afterward, you train the model with backpropagation and a loss function suited to the output, for example an L1 or mean squared error loss on predicted mel-spectrogram frames. Connectionist Temporal Classification (CTC) loss is more commonly associated with speech recognition than with synthesis.
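
The dataset preparation step can be sketched as follows, using only the standard library. This is an illustrative text front end under stated assumptions: the symbol set, the `_` padding symbol, and the helper names `normalize`, `encode`, and `pad_batch` are all hypothetical. Production systems usually add number expansion and phoneme conversion as well.

```python
import re

PAD = "_"  # assumed padding symbol, never produced by normalize()
SYMBOLS = [PAD] + list("abcdefghijklmnopqrstuvwxyz '.,?!")
SYMBOL_TO_ID = {symbol: index for index, symbol in enumerate(SYMBOLS)}


def normalize(text):
    """Lowercase, drop unsupported characters, collapse whitespace."""
    text = text.lower()
    text = re.sub(r"[^a-z '.,?!]", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def encode(text):
    """Map normalized text to a list of integer symbol IDs."""
    return [SYMBOL_TO_ID[ch] for ch in normalize(text)]


def pad_batch(sequences):
    """Right-pad ID sequences so every row in the batch has equal length."""
    max_len = max(len(seq) for seq in sequences)
    pad_id = SYMBOL_TO_ID[PAD]
    return [seq + [pad_id] * (max_len - len(seq)) for seq in sequences]


if __name__ == "__main__":
    batch = pad_batch([encode("Hello, World!"), encode("Hi")])
    for row in batch:
        print(row)
```

Fixing the symbol table up front keeps the IDs stable between training and inference, which matters because the model's input layer is indexed by them.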

Detailed code depends on the specific DL framework and TTS system architecture in use, but the overarching steps remain consistent across implementations.
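
As a framework-independent illustration of the training step, the toy sketch below runs stochastic gradient descent on a mean squared error loss. The "model" is only a per-symbol lookup table that predicts a small feature vector for each symbol ID. It is a deliberately simple stand-in for a real acoustic model, and the function names and data are made up for the example. Real systems would use a framework such as PyTorch or TensorFlow to compute gradients through many layers.

```python
def mse(pred, target):
    """Mean squared error between two equal-length vectors."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def train_lookup_model(pairs, n_symbols, dim, lr=0.1, epochs=200):
    """Fit one feature vector per symbol ID with plain SGD.

    pairs: list of (symbol_id, target_vector) training examples.
    """
    weights = [[0.0] * dim for _ in range(n_symbols)]
    for _ in range(epochs):
        for symbol_id, target in pairs:
            pred = weights[symbol_id]
            for k in range(dim):
                # Gradient of the MSE loss with respect to pred[k].
                grad = 2.0 * (pred[k] - target[k]) / dim
                pred[k] -= lr * grad
    return weights


if __name__ == "__main__":
    # Made-up targets standing in for acoustic features.
    data = [(0, [1.0, 2.0]), (1, [-1.0, 0.5])]
    model = train_lookup_model(data, n_symbols=2, dim=2)
    for symbol_id, target in data:
        print(symbol_id, mse(model[symbol_id], target))
```

The loop shows the pattern that carries over to larger models: predict, measure the loss, compute its gradient, and nudge the parameters in the opposite direction.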

Building More Natural TTS Voices with Python and Java

Building natural-sounding TTS voices with programming languages like Python and Java has become more accessible thanks to libraries and APIs tailored to DL and TTS. In Python, libraries such as TensorFlow or PyTorch allow for developing complex DL models that can generate lifelike speech. When training a custom model is unnecessary, hosted services can be called instead: the gTTS library for Python, for example, is an interface to Google Translate's text-to-speech API and returns MP3 audio for a given text.
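
A minimal sketch of the hosted-service route with gTTS is shown below. It assumes the third-party `gtts` package is installed and that the machine has network access. The wrapper name `synthesize_to_mp3` and the output file name are hypothetical choices for this example.

```python
from pathlib import Path


def synthesize_to_mp3(text, out_path, lang="en"):
    """Synthesize `text` with gTTS and save it as an MP3 file.

    Hypothetical convenience wrapper around gTTS(...).save(...).
    """
    if not text.strip():
        raise ValueError("text must not be empty")

    # Imported lazily so the module loads even where gtts is not installed.
    from gtts import gTTS

    gTTS(text=text, lang=lang).save(str(out_path))
    return Path(out_path)


if __name__ == "__main__":
    # Requires network access; writes hello.mp3 to the current directory.
    print(synthesize_to_mp3("Hello from a text-to-speech example.", "hello.mp3"))
```

Because the audio is produced by a remote service, voice quality and available languages are determined by that service rather than by any locally trained model.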

Similarly, Java developers might use Deeplearning4j (DL4J) or other DL frameworks alongside TTS libraries like FreeTTS to build systems that convert text to speech, although FreeTTS itself is an older, non-neural synthesizer and will not match the naturalness of neural voices on its own. Each language offers its own set of tools and libraries that make it feasible to develop TTS systems capable of producing high-quality synthetic voices.

Since TTS development involves large audio datasets and heavy computation, developers should plan for data preprocessing, budget for model training time, and select hardware, typically GPUs, that can run DL workloads efficiently.
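
A quick back-of-the-envelope calculation can help with this planning. The sketch below estimates how many mel-spectrogram frames a dataset yields and how much storage the uncompressed features need. The defaults (22,050 Hz audio, a hop of 256 samples, 80 mel bands, 32-bit floats) are common choices but are assumptions here, as is the frame-count convention of one frame per hop plus one, which applies when frames are centered on the signal.

```python
def estimate_mel_storage(total_audio_hours, sample_rate=22050,
                         hop_length=256, n_mels=80, bytes_per_value=4):
    """Return (frame_count, byte_count) for a dataset's mel-spectrograms.

    Treats the whole dataset as one continuous signal, which slightly
    underestimates the total when it is split into many short clips.
    """
    total_samples = int(total_audio_hours * 3600 * sample_rate)
    n_frames = total_samples // hop_length + 1
    n_bytes = n_frames * n_mels * bytes_per_value
    return n_frames, n_bytes


if __name__ == "__main__":
    for hours in (1, 24, 100):
        frames, size = estimate_mel_storage(hours)
        print(f"{hours:>4} h: {frames:,} frames, {size / 1e9:.2f} GB")
```

Under these assumptions, one hour of audio comes to roughly 0.1 GB of features, so the precomputed features for even a large dataset usually fit on disk, and training time and GPU memory tend to be the tighter constraints.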

Breakthroughs in Machine Translation

Machine translation has seen transformative developments, and speech-based translation also relies on steadily improving text-to-speech (TTS) synthesis APIs like those offered by Unreal Speech. Unreal Speech claims cost reductions of up to 90% compared to competitors such as ElevenLabs and Play.ht, and up to half the price of tech giants like Amazon, Microsoft, and Google, positioning itself as a highly economical solution for synthesizing human-like speech. These cost advantages matter for machine translation workflows too, where translated text often needs to be voiced accurately and at scale for a reasonable price.

Academic researchers who use TTS technology to generate datasets or conduct linguistic studies can significantly reduce costs with Unreal Speech. Similarly, software engineers and game developers can use the Unreal Speech API to create responsive and realistic voice interactions within apps and games, with scalable processing that the company says can handle thousands of pages per hour without loss in quality or performance.

For educators, the high quality and cost-effectiveness of Unreal Speech open up new possibilities in creating immersive learning experiences. The ability to produce natural-sounding voiceovers for educational content becomes a powerful tool for inclusivity, catering to students with diverse learning preferences and needs. The Unreal Speech API's flexibility in voice selection, pitch, speed, bitrate, and codec options allows a tailored audio experience and supports a more engaging and versatile teaching environment.

Common Questions Re: Speech Technology

Behind the Scenes: How Is Neural TTS Refining Voice Interactions?

Neural Text-to-Speech (TTS) is refining voice interactions by utilizing neural network architectures that mimic the nuances of human speech patterns, resulting in more dynamic and lifelike voice synthesis.

What Innovations Are Powering Today's TTS Systems?

Today's Text-to-Speech systems are powered by advanced deep learning algorithms that process and convert text data into speech output that closely resembles human voice, with improvements in intonation, stress, and rhythm.

Will the Latest AI Make TTS Indistinguishable from Human Speech?

The latest AI advancements strive to make TTS indistinguishable from human speech by enhancing the naturalness and expressiveness of the synthesized voice, although achieving complete indistinguishability remains challenging.