Revolutionizing Human-Machine Interactions: Microsoft's Advances in Neural TTS

The quest to create a seamless dialogue between people and machines has long driven modern technology. With Microsoft's recent advances in neural text-to-speech (TTS) synthesis, that dialogue is undergoing a paradigm shift. Neural TTS uses deep learning to produce voices so lifelike that they are often hard to distinguish from human speech. The nuances of tone, the rhythm of speech, and the subtle inflections that convey meaning are now reproduced with remarkable accuracy, thanks to the deep neural networks (DNNs) at the core of this technology.

Incorporating Azure AI's neural TTS into applications promises a transformative experience: text-to-audiobook conversion, in-car navigation systems, and virtual assistants can all become more intuitive and engaging. The implications are broad, from reducing users' cognitive load to enabling more natural and inclusive interactions across many domains. The technology was released in preview, which gave developers an early look at the next generation of TTS and invited feedback to further refine human-AI interaction.

| Topics | Discussions |
|---|---|
| Technological Advancements in Neural Text-to-Speech Synthesis | Explore the latest deep neural network innovations that are bringing TTS to an unprecedented level of human-like quality. |
| Microsoft's New Neural Text-To-Speech Service Helps Machines Speak Like People | Discover how Microsoft's neural TTS, demonstrated at the Ignite conference, is making strides towards more natural machine-human communication. |
| Seamless Integration of Neural TTS in Various Applications | Learn about the practical applications of neural TTS in transforming digital text into vibrant speech across platforms and devices. |
| Getting Started with Neural TTS: Technical Quickstart Guides | Dive into hands-on quickstart tutorials that guide you through integrating neural TTS capabilities into your own applications. |
| Enhancing Natural Interactions: User-Centric Applications of TTS | Understand how neural TTS is paving the way for more engaging and human-centric user interactions within digital products. |
| Common Questions Re: Neural Text-to-Speech | Answers to the most commonly asked questions about neural text-to-speech, focusing on how AI generates lifelike voices and the technology behind the most realistic TTS. |

Technological Advancements in Neural Text-to-Speech Synthesis

The TTS landscape keeps evolving, and increasingly sophisticated technology is blurring the line between synthetic and human voices. The following glossary defines the key terms behind recent advances in neural TTS and frames the discussion of how these innovations are changing text-to-speech.

Neural TTS: Refers to text-to-speech systems that utilize neural networks to generate speech that mimics the human voice, including its intricacies and emotions.

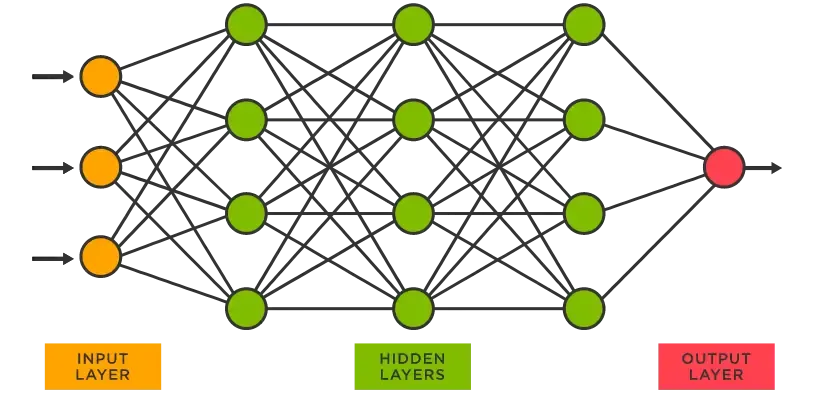

Deep Neural Networks (DNNs): Neural network architectures with multiple hidden layers that learn high-level abstractions from data. They are central to realistic voice synthesis.

Prosody: The rhythm, stress, and intonation of speech that contribute to the naturalness and expressiveness of the synthesized voice.

Azure Cognitive Services: A collection of cloud-based AI services and APIs from Microsoft Azure (since renamed Azure AI services) that let developers add AI capabilities, including neural TTS, to their applications.

Synthetic Speech: Artificially generated human speech that is produced by a computer, typically with the aim of emulating natural human speech patterns.

Text-to-Audiobooks: The process of converting digital text into spoken narration, using TTS technology to produce audiobooks.

Conversational AI: An advanced form of AI that enables natural, flowing spoken interactions between humans and machines.

Speech Services: Part of Azure Cognitive Services, these tools provide capabilities like speech to text and TTS, including neural TTS technologies.
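To make the Prosody and Speech Services entries concrete, here is a minimal sketch that wraps text in SSML `<prosody>` markup and can optionally send it to the Azure Speech SDK through `SpeechSynthesizer.speak_ssml_async`. The voice name `en-US-AriaNeural` and the `rate`/`pitch` values are illustrative assumptions. Voice availability varies by region, so check the current voice list. The SDK is imported only inside `speak_ssml`, which means the SSML builder can be tried without an Azure account.

```python
from xml.sax.saxutils import escape, quoteattr


def build_prosody_ssml(text: str, voice: str = "en-US-AriaNeural",
                       rate: str = "-10%", pitch: str = "+5%",
                       lang: str = "en-US") -> str:
    """Wrap text in SSML that adjusts speaking rate and pitch.

    The default voice name and prosody values are illustrative assumptions.
    """
    # escape() turns &, < and > into entities so arbitrary text cannot break the XML;
    # quoteattr() adds quotes around attribute values and escapes them.
    return (
        '<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" '
        f"xml:lang={quoteattr(lang)}>"
        f"<voice name={quoteattr(voice)}>"
        f"<prosody rate={quoteattr(rate)} pitch={quoteattr(pitch)}>"
        f"{escape(text)}"
        "</prosody></voice></speak>"
    )


def speak_ssml(ssml: str, key: str, region: str) -> bool:
    """Send SSML to the Azure Speech service; True means audio was produced."""
    # Imported lazily so build_prosody_ssml() works without the SDK installed.
    import azure.cognitiveservices.speech as speechsdk

    speech_config = speechsdk.SpeechConfig(subscription=key, region=region)
    synthesizer = speechsdk.SpeechSynthesizer(speech_config=speech_config)
    result = synthesizer.speak_ssml_async(ssml).get()
    return result.reason == speechsdk.ResultReason.SynthesizingAudioCompleted


if __name__ == "__main__":
    print(build_prosody_ssml("Turn left in 200 meters & keep right."))
```

Slowing the rate slightly is a common choice for navigation prompts. Other applications may want different values, or no `<prosody>` element at all.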

Microsoft's New Neural Text-To-Speech Service Helps Machines Speak Like People

Microsoft's Neural Text-to-Speech (TTS) service has significantly expanded the frontier of artificial speech synthesis. Using deep neural networks (DNNs), Microsoft's researchers have refined the acoustic details to the point where synthesized voices come close to human speech. The service produces natural prosody and clear articulation. This reduces the listener fatigue that was common with older synthetic voices and improves overall interaction with AI systems. The announcement was posted by Microsoft Azure on September 24, 2018, and reflects Microsoft's continued investment in its cognitive services.

The technology was unveiled publicly at the Microsoft Ignite conference, where Microsoft highlighted its real-world applications. These applications span sectors such as audiobooks and automotive interfaces. Microsoft added neural TTS to the Speech Services in Azure Cognitive Services, which gave developers and technology enthusiasts access to it in preview. The aim was to refine the system through broad feedback and testing.

Microsoft's investment in neural TTS goes beyond a technology demonstration: it aims to change how people interact with machines every day. Microsoft has shared few details about the project's origins and development. Even so, its impact is expected to reach software that reads text aloud, from the most popular TTS products to online neural TTS platforms. The technology is a significant step forward and reflects Microsoft's commitment to making interactions with digital assistants and navigation systems feel more intuitive and human.

Previewing the Future: Neural TTS at Microsoft Ignite

The Microsoft Ignite conference served as a key venue for showcasing advances in neural TTS. Attendees heard firsthand how far voice synthesis has come. This points to a future in which digital assistants and conversational interfaces sound considerably more human in their responsiveness and tone.

Azure Cognitive Services: A Sneak Peek into Speech Services Preview

By integrating neural TTS into the Speech Services in Azure Cognitive Services, Microsoft has given eager developers the tools to push the boundaries of lifelike synthetic speech. The preview phase offers a glimpse of what neural TTS can do. It also invites the developer community to help refine a technology that could improve the many applications that depend on natural voice interaction.

Seamless Integration of Neural TTS in Various Applications

Neural text-to-speech (TTS) has ushered in a new era of voice synthesis. It can be integrated into a wide range of applications, changing how machines communicate with their users. Because neural TTS delivers highly realistic speech, it makes chatbots more engaging and effective in conversation. The same ability to generate speech that is hard to tell apart from a human voice extends beyond chatbots to virtual assistants, where it also improves the user experience.

Beyond the realms of virtual assistance and customer service, neural TTS is revolutionizing the publishing industry by transforming written content into engaging audio formats. The implications here are immense: from bringing books to life for the visually impaired to creating immersive storytelling experiences for audiobook enthusiasts. Similarly, the automotive industry is now on the cusp of integrating more intuitive and responsive navigation systems that speak to drivers with clear, natural-sounding directions, thus augmenting travel safety and comfort.
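Converting a whole book usually requires splitting the text into pieces that fit a TTS service's per-request size limit. The following minimal sketch splits text at sentence boundaries into chunks of at most `max_chars` characters. The 1,000-character default mirrors the limit noted in the Unreal Speech code sample later in this article. It is an assumption, so check your provider's actual limit. The regex sentence splitter is deliberately naive and will also split after abbreviations such as "Dr.".

```python
import re
from typing import List


def chunk_text(text: str, max_chars: int = 1000) -> List[str]:
    """Split text into chunks of at most max_chars, preferring sentence boundaries."""
    # Naive sentence split: break after ., ! or ? when followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[str] = []
    current = ""
    for sentence in sentences:
        if not sentence:
            continue
        # Hard-split any single sentence that is longer than the limit.
        while len(sentence) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:max_chars])
            sentence = sentence[max_chars:]
        candidate = f"{current} {sentence}" if current else sentence
        if len(candidate) <= max_chars:
            current = candidate  # the sentence still fits in the current chunk
        else:
            chunks.append(current)  # close the full chunk and start a new one
            current = sentence
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    chapter = "It was a dark night. The wind howled! Who was at the door?"
    for number, chunk in enumerate(chunk_text(chapter, max_chars=40), start=1):
        print(number, chunk)
```

Each chunk can then be synthesized in order and the resulting audio files concatenated into chapters.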

The versatility of neural TTS is further underscored by its potential for multilingual support, which matters in today's globalized society. It promises to lower language barriers and improve understanding and inclusion for diverse populations. In this way, neural TTS is helping set the stage for a more inclusive, linguistically diverse digital age in which people can communicate effectively across languages.
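In practice, multilingual output usually means choosing a voice for each language. The minimal sketch below assumes Azure-style SSML, where a single `<speak>` document can contain several `<voice>` elements. The locale-to-voice table is a hypothetical example. The names are Azure neural voices, but availability varies by region and over time, so treat them as placeholders.

```python
from typing import Dict, List, Tuple
from xml.sax.saxutils import escape, quoteattr

# Hypothetical locale-to-voice table; confirm names against the current voice list.
VOICES: Dict[str, str] = {
    "en-US": "en-US-AriaNeural",
    "de-DE": "de-DE-KatjaNeural",
    "fr-FR": "fr-FR-DeniseNeural",
    "es-ES": "es-ES-ElviraNeural",
}


def multilingual_ssml(segments: List[Tuple[str, str]],
                      default_locale: str = "en-US") -> str:
    """Build one SSML document with a <voice> element per (locale, text) segment."""
    parts = []
    for locale, text in segments:
        if locale not in VOICES:
            raise ValueError(f"no voice configured for locale {locale!r}")
        # quoteattr() quotes the voice name; escape() protects the spoken text.
        parts.append(f"<voice name={quoteattr(VOICES[locale])}>{escape(text)}</voice>")
    return (
        '<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" '
        f"xml:lang={quoteattr(default_locale)}>" + "".join(parts) + "</speak>"
    )


if __name__ == "__main__":
    print(multilingual_ssml([("en-US", "Welcome."), ("fr-FR", "Bienvenue à tous.")]))
```

The resulting string can be passed to any SSML-capable synthesis call, such as `speak_ssml_async` in the Azure Speech SDK. Raising an error for unknown locales avoids silently reading text in the wrong voice.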

Getting Started with Neural TTS: Technical Quickstart Guides

Implementing Neural TTS in Python: A Step-by-Step Guide

Python remains one of the most versatile programming languages and is known for how easily it can be used to build applications, including neural TTS applications. A good starting point is the Azure Cognitive Services Speech SDK, installed with `pip install azure-cognitiveservices-speech`. The following basic example shows how to make a simple text-to-speech request in Python with this SDK:

```python
import azure.cognitiveservices.speech as speechsdk

# Set up the subscription info for the Azure Speech service.
# Replace these placeholders with your own key and region.
subscription_key = "YourSubscriptionKey"
service_region = "YourServiceRegion"

# Set up the speech configuration using the subscription info.
speech_config = speechsdk.SpeechConfig(subscription=subscription_key, region=service_region)

# Select a neural voice. This is optional, and available voices depend on the region.
# Voice names change over time (early previews used "en-US-JessaNeural"),
# so check the current voice list.
speech_config.speech_synthesis_voice_name = "en-US-AriaNeural"

# Create a speech synthesizer; without an audio config it plays through the default speaker.
speech_synthesizer = speechsdk.SpeechSynthesizer(speech_config=speech_config)

# Get the text to synthesize from the user.
text_to_synthesize = input("Enter some text that you want to synthesize: ")

# Synthesize the text and block until the result is available.
result = speech_synthesizer.speak_text_async(text_to_synthesize).get()

# Check the result.
if result.reason == speechsdk.ResultReason.SynthesizingAudioCompleted:
    print('Speech synthesized for the text: "{}"'.format(text_to_synthesize))
elif result.reason == speechsdk.ResultReason.Canceled:
    cancellation_details = result.cancellation_details
    print("Speech synthesis canceled: {}".format(cancellation_details.reason))
    if cancellation_details.reason == speechsdk.CancellationReason.Error:
        print("Error details: {}".format(cancellation_details.error_details))
```

This code will prompt the user for text input and synthesize speech from the text using Azure's neural TTS service. It is also set up to handle errors that may occur during the process. Remember, the subscription key and service region must be replaced with your actual Azure subscription details.
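Instead of pasting the key into source code, you can read it from environment variables. The sketch below assumes variables named `SPEECH_KEY` and `SPEECH_REGION`. These names are a convention chosen here; the SDK does not read them automatically. It also writes the audio to a WAV file with `speechsdk.audio.AudioOutputConfig(filename=...)` instead of playing it through the speaker, which suits batch jobs such as audiobook generation. The voice name is an illustrative default, and the SDK is imported only inside the function that needs it.

```python
import os
from typing import Tuple


def load_speech_settings() -> Tuple[str, str]:
    """Read the Speech key and region from environment variables (assumed names)."""
    key = os.environ.get("SPEECH_KEY", "").strip()
    region = os.environ.get("SPEECH_REGION", "").strip()
    missing = [name for name, value in (("SPEECH_KEY", key), ("SPEECH_REGION", region))
               if not value]
    if missing:
        raise RuntimeError("Set the environment variable(s): " + ", ".join(missing))
    return key, region


def synthesize_to_wav(text: str, path: str = "output.wav",
                      voice: str = "en-US-AriaNeural") -> None:
    """Synthesize text into a WAV file at `path`."""
    import azure.cognitiveservices.speech as speechsdk  # lazy import

    key, region = load_speech_settings()
    speech_config = speechsdk.SpeechConfig(subscription=key, region=region)
    speech_config.speech_synthesis_voice_name = voice
    # Send the audio to a file instead of the default speaker.
    audio_config = speechsdk.audio.AudioOutputConfig(filename=path)
    synthesizer = speechsdk.SpeechSynthesizer(speech_config=speech_config,
                                              audio_config=audio_config)
    result = synthesizer.speak_text_async(text).get()
    if result.reason != speechsdk.ResultReason.SynthesizingAudioCompleted:
        # cancellation_details is only populated when synthesis was canceled.
        details = result.cancellation_details
        reason = details.error_details if details else result.reason
        raise RuntimeError(f"Synthesis did not complete: {reason}")
```

Failing early with a clear message about a missing variable is usually easier to debug than an authentication error returned by the service.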

Integrating Neural TTS with Java and JavaScript: Code Samples

Java and JavaScript applications can also add neural TTS, and Microsoft's Speech SDK is available for both languages. The example below is a Node.js (server-side JavaScript) sample, not Java. It uses axios to call Unreal Speech's streaming endpoint and saves the returned MP3 audio to a file:

```javascript
const axios = require('axios');
const fs = require('fs');

const headers = {
  'Authorization': 'Bearer YOUR_API_KEY',
};

const data = {
  'Text': '<YOUR_TEXT>', // Up to 1,000 characters
  'VoiceId': '<VOICE_ID>', // Scarlett, Dan, Liv, Will, Amy
  'Bitrate': '192k', // 320k, 256k, 192k, ...
  'Speed': '0', // -1.0 to 1.0
  'Pitch': '1', // 0.5 to 1.5
  'Codec': 'libmp3lame', // libmp3lame or pcm_mulaw
};

axios({
  method: 'post',
  url: 'https://api.v6.unrealspeech.com/stream',
  headers: headers,
  data: data,
  responseType: 'stream',
})
  .then(function (response) {
    // Stream the audio response straight into a local MP3 file.
    response.data.pipe(fs.createWriteStream('audio.mp3'));
  })
  .catch(function (error) {
    console.error('Request failed:', error.message);
  });
```

For JavaScript applications that run in the browser, you can use the Web Speech API for TTS. This API is built into modern browsers and does not require an Azure subscription. Here's a simple example of how to use it:

```javascript
const synth = window.speechSynthesis;
const inputForm = document.querySelector('form');
const inputTxt = document.querySelector('input');

inputForm.onsubmit = function (event) {
  event.preventDefault();
  const utterThis = new SpeechSynthesisUtterance(inputTxt.value);
  synth.speak(utterThis);
  inputTxt.blur();
};
```

Remember to check compatibility since the Web Speech API may not be supported in all browsers or may have different levels of support for various languages and voices, including neural ones.

Enhancing Natural Interactions: User-Centric Applications of TTS

Text-to-speech (TTS) synthesis has seen a sea change with the arrival of Unreal Speech. Its API significantly undercuts the costs traditionally associated with TTS services. Unreal Speech cites savings of up to 90% compared with competitors such as ElevenLabs and Play.ht, and up to 50% compared with services from Amazon, Microsoft, and Google. It has carved out a niche built on affordability without compromising quality, a point echoed by Derek Pankaew, CEO of Listening.com.

In the context of academic research, software engineering, game development, and education, Unreal Speech presents an enticing proposition. For researchers, the ability to convert large volumes of text to audio can enhance the accessibility of their work, making it available to wider audiences, including those with visual impairments. Similarly, software engineers can now experiment and integrate high-volume TTS capabilities into their applications at a fraction of the cost, potentially increasing the scope and reach of their software solutions.

Game developers can create more immersive and responsive audio experiences with high-quality TTS. Educators can use Unreal Speech to turn written material into spoken content that accommodates diverse learning styles and needs. Unreal Speech pairs this cost advantage with low latency (0.3 s), high uptime (99.9%), and generous monthly character limits (625M characters on the Enterprise Plan), which leaves it well positioned to reshape the TTS market. The simplicity of the API, along with its quickstart code samples in Python, Node.js, and React Native, also speaks to its ease of use.
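For comparison with the Node.js sample earlier in this article, here is a minimal Python sketch of the same `/stream` request that uses only the standard library. The endpoint, `Authorization` header, and body fields are copied from that sample. The range checks restate its inline comments and are assumptions about the API's limits, not documented guarantees. `build_stream_request` and `download_audio` are hypothetical helper names and are not part of any Unreal Speech SDK. As in the Node.js sample, `Speed` and `Pitch` are sent as strings.

```python
import json
import shutil
import urllib.request

STREAM_URL = "https://api.v6.unrealspeech.com/stream"  # endpoint from the Node.js sample


def build_stream_request(api_key: str, text: str, voice_id: str = "Scarlett",
                         bitrate: str = "192k", speed: float = 0.0,
                         pitch: float = 1.0,
                         codec: str = "libmp3lame") -> urllib.request.Request:
    """Build (but do not send) a POST request mirroring the Node.js sample."""
    # These limits restate the comments in the Node.js sample; they are assumptions.
    if not 0 < len(text) <= 1000:
        raise ValueError("text must be 1 to 1,000 characters")
    if not -1.0 <= speed <= 1.0:
        raise ValueError("speed must be between -1.0 and 1.0")
    if not 0.5 <= pitch <= 1.5:
        raise ValueError("pitch must be between 0.5 and 1.5")
    body = {
        "Text": text,
        "VoiceId": voice_id,
        "Bitrate": bitrate,
        "Speed": str(speed),
        "Pitch": str(pitch),
        "Codec": codec,
    }
    return urllib.request.Request(
        STREAM_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def download_audio(request: urllib.request.Request, path: str = "audio.mp3") -> None:
    """Send the request and stream the response body into a file."""
    with urllib.request.urlopen(request) as response, open(path, "wb") as out:
        shutil.copyfileobj(response, out)
```

With a valid key, `download_audio(build_stream_request(api_key, "Hello there"))` would write `audio.mp3`. Building the request separately from sending it lets you validate input without making a network call.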

Common Questions Re: Neural Text-to-Speech

How Does Microsoft's Neural TTS Create Lifelike Speech?

Microsoft's neural Text-to-Speech (TTS) employs deep neural networks to produce voices that are nearly indistinguishable from human speech, offering improved prosody and clear articulation, resulting in more natural interactions.

What Powers the Most Realistic Text-to-Speech Voices?

The most realistic text-to-speech voices are powered by advanced deep learning algorithms and neural network technologies that analyze and simulate human-like speech patterns.

How Is AI Transforming Text into Natural-Sounding Speech?

AI transforms text into natural-sounding speech by understanding the context and nuances of the language and using machine learning to generate speech that mimics the tonality and rhythm of human communication.